Cyabra’s latest research reveals a sophisticated disinformation campaign surrounding the recent helicopter crash in Iran, driven by fake profiles on social media. The crash, which killed Iranian President Ebrahim Raisi, sparked heated online discussions in which fake profiles promoted false narratives, including a conspiracy theory that reached a potential audience of billions.

TL;DR?

- 22% of X accounts discussing the Iran helicopter crash are fake.

- Two fake campaigns, one mourning Raisi and one celebrating his death, reached over 6 million potential views.

- A conspiracy theory falsely blaming Israel for the crash reached a potential audience of 4 billion.

Disinformation Campaigns in Action

Fake profiles infiltrated authentic conversations about the helicopter crash involving Ebrahim Raisi almost as soon as his death was announced.

These profiles were able to reach over 6 million potential views and generate 3,000 engagements in just two days.

The fake accounts exhibited coordinated activity, showing similar and even identical behavior patterns, with each account promoting one of two narratives:

Raisi as a National Hero

30% of these fake profiles amplified the narrative that Raisi was a national hero and martyr, portraying him in a positive light and emphasizing his heroic qualities. These profiles spread their message using the hashtag #شهید_جمهور (#countrymartyr).

Raisi as a Monster

A similar fake campaign, spread by opponents of Raisi, used fake accounts to frame the crash as symbolic of his downfall, describing him as a monster and as “The Butcher of Tehran” (a name given to him for his role in the mass executions of political prisoners in 1988). The profiles also said Raisi was “going to hell.”

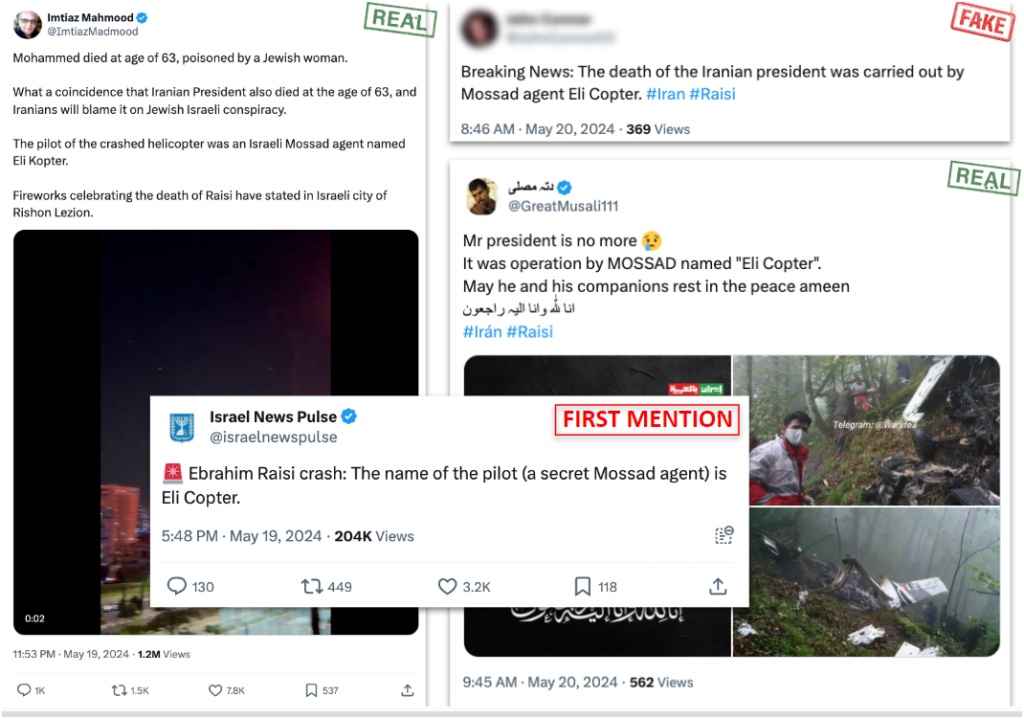

The Conspiracy Theory of Israeli Mossad Agent “Eli Copter”

Cyabra also uncovered a fake news campaign that pushed a conspiracy theory about Israel being responsible for the crash. This theory started as a joke on Israeli social media, with users humorously attributing the crash to a Mossad agent named “Eli Copter” (Hebrew wordplay on the word “helicopter”). However, the joke quickly evolved into a serious accusation.

The account @israelnewspulse first posted the idea as a joke, but it was influencer @ImtiazMadmood who presented it as real, gaining 1.2 million views on a single post.

While other authentic influencers continued to spread the message, fake profiles picked up the theory and pushed it across social media platforms, eventually reaching a potential audience of 4 billion viewers. Although many people tried to explain the joke, the fake news kept spreading.

The Impact of Fake Profiles

The heavy presence of fake profiles in online conversations about significant events like the Iran helicopter crash, together with their outsized influence, highlights the growing threat of disinformation and fake news spread by inauthentic accounts. As this case shows, fake profiles can manipulate social media discourse, spread false narratives, and have a huge impact on public perception.

For those responsible for managing the online reputation of parties involved in high-profile global events like the Iran helicopter crash, here are strategies to consider:

- Monitor online conversations for fake campaigns and fake profiles, and alert the platforms to inauthentic accounts spreading fake news and conspiracy theories.

- Debunk the conspiracy theory, and surface and promote narratives from positive, authentic profiles to counteract the negative impact of fake accounts.

- Implement ongoing monitoring to detect and respond to new fake accounts and disinformation campaigns promptly.

The disinformation campaign surrounding the Iran helicopter crash underscores the importance of vigilance in detecting and combating fake profiles and fake news. Cyabra’s findings reveal how these malicious activities can shape public discourse and spread false narratives on a massive scale. For comprehensive solutions to protect online discourse from these threats, contact Cyabra.