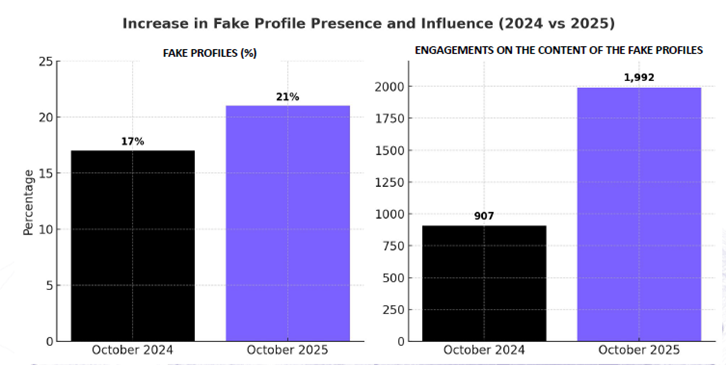

Cyabra’s analysis of climate change discourse revealed a disturbing shift in disinformation tactics between October 2024 and October 2025. The analysis tracked the activity of fake profiles in climate change discussions across major social media platforms. It found that while the share of fake accounts in climate conversations rose from 17% to 21%, the engagement those accounts received skyrocketed by 119%.

These coordinated networks strategically target scientific content with synchronized tactics, creating the false impression of widespread climate skepticism. Government agencies can counter these tactics through monitoring, rapid response capabilities, network mapping, and AI-powered detection tools to protect public discourse from manipulation.

TL;DR

- Engagement with fake accounts more than doubled in one year, surging 119% and giving them disproportionate influence

- 21% of accounts in climate conversations were fake in October 2025 (compared to 17% in 2024), showing the rise of coordinated efforts aimed at undermining climate science

- Inauthentic accounts focus on “data-based” narratives, creating an echo network that artificially amplifies #ClimateScam, #ClimateHoax, and #ClimateCon hashtags

- Fake profiles now integrate directly with authentic science communicators, operating across multiple conversation clusters to create an illusion of grassroots skepticism

The Numbers Behind Climate Disinformation

Cyabra’s analysis revealed that fake accounts have established a sustained network, rather than operating in isolated campaigns. They maintain constant visibility of climate-denial narratives by strategically positioning themselves within authentic discussions, creating an artificial sense of widespread skepticism.

As fake accounts grew from 17% to 21% of accounts between 2024 and 2025, an even more disturbing trend emerged in their impact. Engagement received by fake profiles more than doubled in a single year, surging 119% in 2025. This points to more than a simple increase in numbers: fake profiles have become dramatically more influential in shaping climate narratives.

In terms of reach, content from these fake accounts garnered more than 630,000 potential exposures in both 2024 and 2025. Because reach held steady while engagement more than doubled, each exposure generated more interaction, which suggests the networks’ distribution methods became more efficient.
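
The headline figures can be related with some back-of-the-envelope arithmetic. The sketch below is illustrative only and rests on an assumption the figures above do not confirm: that the total number of accounts observed was the same in both years. Under that assumption, a 4-percentage-point rise in the fake share means roughly 24% more fake accounts, so a 119% rise in engagement implies each fake account drew about 1.77 times as much engagement as before; if reach was also about the same in both years, engagement per potential exposure rose by the full 2.19x factor. The function name `summarize` is hypothetical.

```python
# Back-of-the-envelope arithmetic on the report's headline figures.
# ASSUMPTION (not stated in the report): the total number of accounts observed
# was the same in October 2024 and October 2025.

def summarize(share_before, share_after, engagement_increase):
    """Derive ratios from the fake-account share in two years and the
    fractional engagement increase (1.19 means +119%)."""
    point_change = (share_after - share_before) * 100      # percentage points
    account_growth = share_after / share_before            # fake-account count multiplier (equal totals)
    engagement_multiplier = 1 + engagement_increase        # +119% -> 2.19x
    per_account_multiplier = engagement_multiplier / account_growth
    return point_change, account_growth - 1, engagement_multiplier, per_account_multiplier


points, relative, engagement, per_account = summarize(0.17, 0.21, 1.19)
print(f"Fake share: +{points:.0f} percentage points (+{relative:.1%} relative)")
print(f"Engagement on fake profiles: {engagement:.2f}x")
print(f"Implied engagement per fake account: {per_account:.2f}x")
```

If the total account pool actually grew or shrank between the two samples, the per-account figure changes accordingly; only the percentage-point change and the 2.19x engagement multiplier follow directly from the reported numbers.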

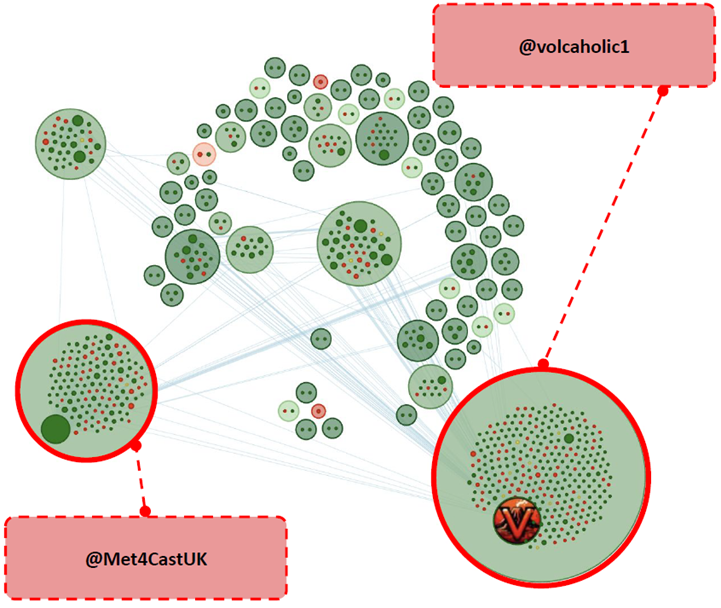

Particularly notable was the targeted infiltration of scientific discussions and engagement with climate influencers: Cyabra found that 27% of the profiles engaging with the weather account @volcaholic1, and 27% of those engaging with @Met4CastUK, were inauthentic. These figures show how fake profiles strategically spread misleading content about scientific data and extreme weather events.

Sophisticated Coordination Tactics

Cyabra’s investigation uncovered several sophisticated coordination tactics used by fake profiles:

- Multiple inauthentic profiles engaged with targeted content within minutes of each other

- Identical messaging deployed across different community clusters

- Strategic positioning across diverse audience groups to spread consistent narratives
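
The “identical messaging” pattern can be screened for by grouping posts whose text matches after light normalization, then checking how many distinct accounts and conversation clusters each message spans. The following is a minimal sketch, not Cyabra’s method: the `(account_id, cluster_id, text)` record layout, the function names, the thresholds, and the sample posts are all hypothetical, and cluster labels are assumed to come from an earlier community-detection step.

```python
import re
from collections import defaultdict

def normalize(text):
    """Lowercase, drop URLs and @mentions, and collapse whitespace so trivially
    varied copies of the same message compare equal."""
    text = re.sub(r"https?://\S+", "", text.lower())
    text = re.sub(r"@\w+", "", text)
    return " ".join(text.split())

def repeated_messages(posts, min_accounts=3, min_clusters=2):
    """posts: iterable of (account_id, cluster_id, text) tuples (hypothetical schema).
    Return {message: (accounts, clusters)} for normalized messages posted by at
    least `min_accounts` distinct accounts across at least `min_clusters` clusters."""
    accounts, clusters = defaultdict(set), defaultdict(set)
    for account_id, cluster_id, text in posts:
        message = normalize(text)
        if message:
            accounts[message].add(account_id)
            clusters[message].add(cluster_id)
    return {
        message: (accounts[message], clusters[message])
        for message in accounts
        if len(accounts[message]) >= min_accounts and len(clusters[message]) >= min_clusters
    }


# Hypothetical sample posts (not data from the report).
sample = [
    ("a1", "weather", "Nice graph. #ClimateScam"),
    ("a2", "policy", "nice graph.   #ClimateScam https://example.com/x"),
    ("a3", "science", "@someone Nice graph. #ClimateScam"),
    ("b1", "science", "Great thread, thanks for sharing the data."),
]
for message, (accts, clus) in repeated_messages(sample).items():
    print(message, sorted(accts), sorted(clus))
```

Exact matching after normalization only catches verbatim copies; lightly reworded variants would need a fuzzy similarity measure, which this sketch does not attempt.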

These accounts specifically targeted posts containing scientific data and graphs, deploying dismissive and sarcastic comments designed to discredit the evidence of climate change. By targeting posts with charts or scientific framing, fake accounts strategically undermine trust in climate science where it should be strongest – in data-driven discussions. Their consistent presence across different posts, hashtags, and time periods indicates a sustained network designed to keep climate-denial narratives constantly visible and seemingly credible.

The three most prominent hashtags remained consistent across both years: #ClimateScam, #ClimateHoax, and #ClimateCon formed the core of the recurring disinformation network. While central themes remained stable, the surrounding network became denser and more interconnected in 2025, showing stronger ties between different communities.
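
The report does not say how it measured that the 2025 network was “denser”. One standard measure, used here purely as an assumption, is the density of an undirected hashtag co-occurrence graph: with N hashtags as nodes and E edges linking hashtags that appear together in at least one post, density is 2E / (N(N - 1)), the fraction of possible pairs that are actually connected. The sketch below uses made-up posts and hypothetical function names; it illustrates the measure, not the report’s data.

```python
from itertools import combinations

def hashtag_graph(posts):
    """Build an undirected co-occurrence graph: nodes are hashtags (case-insensitive),
    edges link hashtags that appear together in at least one post."""
    nodes, edges = set(), set()
    for tags in posts:
        unique = sorted({tag.lower() for tag in tags})
        nodes.update(unique)
        edges.update(combinations(unique, 2))  # sorted input gives each pair one canonical order
    return nodes, edges

def density(nodes, edges):
    """Fraction of possible node pairs that are connected: 2E / (N(N - 1))."""
    n = len(nodes)
    return 0.0 if n < 2 else 2 * len(edges) / (n * (n - 1))


# Hypothetical posts for two periods (not data from the report).
earlier = [["#ClimateScam", "#ClimateHoax"], ["#ClimateCon"], ["#ClimateScam", "#NetZero"]]
later = [["#ClimateScam", "#ClimateHoax", "#ClimateCon"], ["#ClimateScam", "#NetZero"], ["#ClimateCon", "#NetZero"]]
for label, posts in (("earlier", earlier), ("later", later)):
    print(label, round(density(*hashtag_graph(posts)), 2))
```

Both periods share the same four hashtags, but more of them co-occur in the later sample, so its density is higher even though the central themes did not change.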

The Broader Implications of Climate Disinformation

Cyabra’s report demonstrates how inauthentic accounts have evolved from peripheral participants to central conversation actors. The 119% spike in fake engagement represents a concerning evolution in disinformation tactics, showing how a numerical minority (21% of accounts) creates disproportionate influence through coordination. While authentic discussions continue, the artificial amplification of skeptical viewpoints distorts the public’s perception of scientific consensus.

The United Nations has recognized that coordinated campaigns increasingly impede climate progress through denial, greenwashing, and the harassment of scientists. This shift has significant implications for public discourse and policy development. These fake networks use sophisticated tactics, such as the strategic manipulation of climate denial hashtags, to fabricate the appearance of grassroots opposition and undermine government climate policy.

Proactive Strategies Against Disinformation Networks

Government communicators should watch for warning signs of coordinated inauthentic behavior and implement several proactive strategies:

- Monitor climate hashtags and influencers to identify unusual engagement patterns that may indicate coordinated campaigns. Pay particular attention to timing coordination: multiple accounts engaging within minutes of each other.

- Leverage AI-powered detection tools to identify coordinated inauthentic behavior, and develop rapid response capabilities that counter misleading narratives with accurate information. The Science Feedback Climate Safeguard demonstrates how AI tools can track climate misinformation.

- Pre-emptively map known disinformation networks to understand their structure and amplification tactics. Cyabra’s analysis shows these networks maintain consistent messaging while evolving their distribution methods.

- Focus on network-level responses rather than addressing individual accounts or posts. The power of these campaigns lies in their coordinated activity and strategic positioning.
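
Synchronized engagement can be surfaced at the network level rather than post by post: for every pair of accounts, count how many distinct posts they both engaged with within a short time window, then keep only pairs that repeat. The surviving pairs are edges of a co-engagement network that can be mapped and tracked over time. This is a minimal sketch under stated assumptions; the event schema, the five-minute window, the two-post threshold, the function names, and the sample events are hypothetical choices, not values from Cyabra’s analysis.

```python
from collections import Counter, defaultdict
from datetime import datetime, timedelta

def co_engagement_pairs(events, window=timedelta(minutes=5)):
    """events: iterable of (account_id, post_id, timestamp) tuples (hypothetical schema).
    Return a Counter mapping each account pair to the number of distinct posts
    both accounts engaged with within `window` of each other."""
    by_post = defaultdict(list)
    for account_id, post_id, ts in events:
        by_post[post_id].append((ts, account_id))
    pair_counts = Counter()
    for engagements in by_post.values():
        engagements.sort()  # chronological order within each post
        pairs_on_post = set()
        for i, (ts_i, acc_i) in enumerate(engagements):
            for ts_j, acc_j in engagements[i + 1:]:
                if ts_j - ts_i > window:
                    break  # later engagements are even further apart
                if acc_i != acc_j:
                    pairs_on_post.add(tuple(sorted((acc_i, acc_j))))
        pair_counts.update(pairs_on_post)  # count each pair at most once per post
    return pair_counts

def coordinated_pairs(pair_counts, min_shared_posts=2):
    """Keep only pairs that co-engaged on at least `min_shared_posts` posts."""
    return {pair: n for pair, n in pair_counts.items() if n >= min_shared_posts}


# Hypothetical events: x1 and x2 reply within minutes on two posts; "real" replies much later.
t0 = datetime(2025, 10, 1, 12, 0)
events = [
    ("x1", "p1", t0), ("x2", "p1", t0 + timedelta(minutes=2)), ("real", "p1", t0 + timedelta(hours=3)),
    ("x1", "p2", t0 + timedelta(days=1)), ("x2", "p2", t0 + timedelta(days=1, minutes=1)),
    ("real", "p2", t0 + timedelta(days=1, minutes=30)),
]
print(coordinated_pairs(co_engagement_pairs(events)))
```

Requiring repeated co-engagement across several posts, rather than a single coincidence, is what separates a likely coordinated pair from two real users who happened to reply at the same moment. The inner loop compares every pair inside the window, which is fine for a sketch but would need a more efficient sliding-window approach on very busy posts.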

The dramatic surge in the impact of fake accounts in just one year demonstrates the urgency of implementing robust monitoring and response systems.

By analyzing network connections, engagement patterns, and account authenticity, government agencies can distinguish between organic discussions and artificial amplification campaigns, and protect climate policy discussions from artificial manipulation and disinformation attacks. Contact Cyabra to learn more.

Download the full report by Cyabra

Key Questions – and Answers

Government agencies seeking to safeguard climate policy discussions should be on the lookout for warning signs that may indicate coordinated inauthentic behavior, such as:

* Accounts engaging within short timeframes of each other

* Identical messaging appearing across different conversation clusters

* Disproportionate engagement levels compared to account numbers

* Strategic targeting of data-rich or scientific content
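
One way to quantify “disproportionate engagement levels compared to account numbers” is an amplification ratio: a group’s share of total engagement divided by its share of accounts. A ratio of 1 means the group draws engagement in proportion to its size; values well above 1 mean it draws far more than its numbers would suggest. The function name and example numbers below are hypothetical and purely illustrative.

```python
def amplification_ratio(group_accounts, total_accounts, group_engagement, total_engagement):
    """Share of engagement divided by share of accounts for a group of accounts."""
    if total_accounts <= 0 or total_engagement <= 0 or group_accounts <= 0:
        raise ValueError("account and engagement totals, and group size, must be positive")
    account_share = group_accounts / total_accounts
    engagement_share = group_engagement / total_engagement
    return engagement_share / account_share


# Hypothetical example: 21 of 100 accounts receive 42 of 100 engagements.
print(f"{amplification_ratio(21, 100, 42, 100):.2f}")
```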

To protect climate policy discussions, agencies should implement:

* Network pattern monitoring: Coordinated behavior is the key indicator of disinformation campaigns. Tracking synchronized engagement can reveal networks even when individual accounts appear legitimate.

* Hashtag surveillance: The persistence of core climate denial hashtags provides monitoring opportunities to identify new campaigns early.

* Cross-platform analysis: Comprehensive monitoring requires visibility across multiple platforms.

* Data-driven communications: Government messaging should address disinformation tactics directly while providing accessible, evidence-based climate science information.

Fake networks are likely to lean more heavily on AI-generated personas, faster coordination across platforms, and more polished messaging that blends seamlessly into scientific discussions. We may also see short, high-intensity engagement bursts timed around major climate reports or extreme-weather events. These shifts will make fake networks harder to spot and require agencies to strengthen real-time monitoring and anomaly detection.
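
Short, high-intensity engagement bursts are the kind of anomaly a simple baseline comparison can flag: compare each interval’s count of hashtag uses or engagements with the mean and standard deviation of the preceding intervals, and flag values far above that baseline. The sketch below uses one such approach, a trailing z-score with a floor on the standard deviation so that a perfectly flat baseline does not trigger on tiny changes. The function name, window length, threshold, and sample counts are all hypothetical, and a real deployment would need tuning for daily and weekly activity cycles.

```python
from statistics import mean, pstdev

def detect_bursts(counts, baseline=7, threshold=3.0, min_sigma=1.0):
    """Return indices whose count exceeds the trailing-baseline mean by more than
    `threshold` standard deviations. `counts` are per-interval totals, oldest first."""
    bursts = []
    for i in range(baseline, len(counts)):
        window = counts[i - baseline:i]
        mu = mean(window)
        sigma = max(pstdev(window), min_sigma)  # floor avoids near-zero division on flat baselines
        if (counts[i] - mu) / sigma > threshold:
            bursts.append(i)
    return bursts


# Hypothetical hourly counts of a monitored hashtag, with one spike at index 8.
hourly = [10, 12, 11, 9, 10, 11, 12, 10, 60, 11]
print(detect_bursts(hourly))
```

Because a spike stays inside the trailing window for the next `baseline` intervals, it inflates the standard deviation and temporarily makes the detector less sensitive; excluding already-flagged points from the baseline is one common refinement.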

Scientific posts, charts, weather updates, and data-driven threads are prime targets because they shape public understanding of climate evidence. Influencers who share real-time climate information also attract coordinated activity. Agencies should prioritize monitoring these high-impact areas, especially during major climate events or policy announcements when disinformation networks typically intensify their efforts.

Disinformation spikes most sharply during crises: heatwaves, floods, wildfire seasons, or the release of major climate reports. Agencies should establish rapid-response protocols that include real-time monitoring of key hashtags, early identification of coordinated behavior, and pre-approved factual messaging that can be deployed quickly. Preparing before these high-pressure moments ensures agencies can counter misleading narratives before they gain momentum.