Covid-19 conspiracy theories have existed for almost as long as the pandemic itself, and spread into mainstream media towards the end of 2020, with the mass production and worldwide distribution of Covid-19 vaccines.

At the end of 2022, two years after most of the world went back to near normal, “Died Suddenly”, a new film which factcheck.org called “Misinformation masquerading as documentary”, started trending on social media. The film claimed that Covid-19 and the vaccinations protecting against it were both manufactured as part of a plot to depopulate the world.

The analysis of the #DiedSuddenly conversation is covered in two posts: the first on how Cyabra sized the conversation, tracked its amplification, and identified the authentic and inauthentic profiles, and the second on how NOW Affinio uncovered both its authentic and inauthentic stakeholders.

The Anti-Vaxx Movement Moves

The anti-vaxx movement is nothing new. Actually, historians claim it’s been around for hundreds of years, ever since the first vaccine was invented. However, in the last ten years, we’ve witnessed social media being used to spread and amplify those messages, which usually come hand-in-hand with loads of conspiracy theories.

As a result, more people than ever are regularly and constantly exposed to those misinformation and disinformation campaigns, and as any Sociology freshman will tell you – the more you see it, the more susceptible you are. The film “2000 Mules” is another example of a long-debunked conspiracy theory that carved a path into mainstream media.

Who Lives, Who Dies, Who Twists Your Story

“Died Suddenly” premiered on rumble.com on November 21, 2022. During the month following its release, Cyabra tracked the use of the #DiedSuddenly hashtag, and found 3,827 profiles were using it, with deeper analysis showing most of them were supporting the movie’s conspiracy theory and promoting the idea of Covid being a government-organized depopulation scheme.

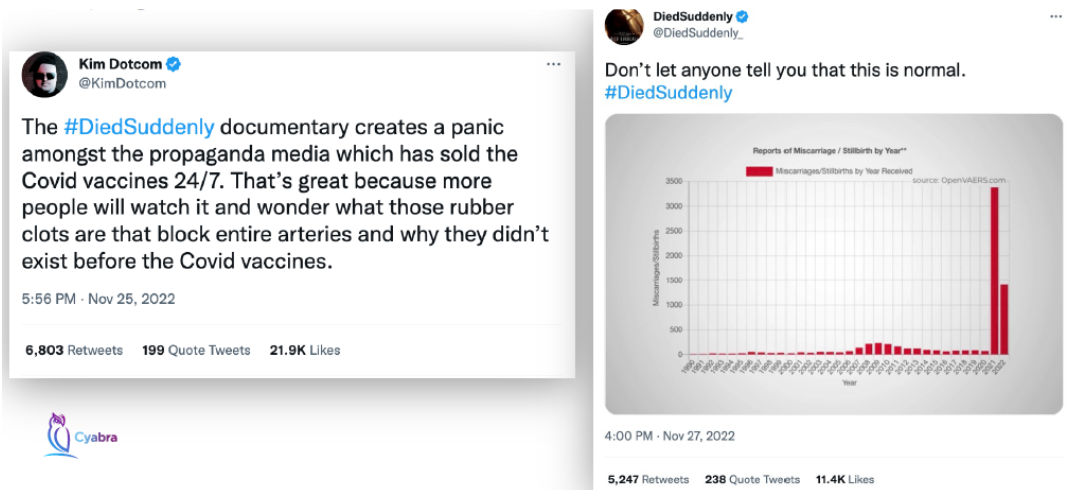

Just an initial thousand people mentioning the movie’s hashtag from late November through December were enough: two specific posts that used the hashtag reached 24 million profiles. Both posts focused on the alleged increase in miscarriages, particularly among vaccinated pregnant women. One came from the film’s own Twitter profile and displayed a graph that was apparently created by an unverified source. The other came from Kim Dotcom, a controversial German-Finnish political activist who has been known to support the anti-vaxx movement and who encouraged his followers on Twitter to spread the movie’s ideas before it even came out.

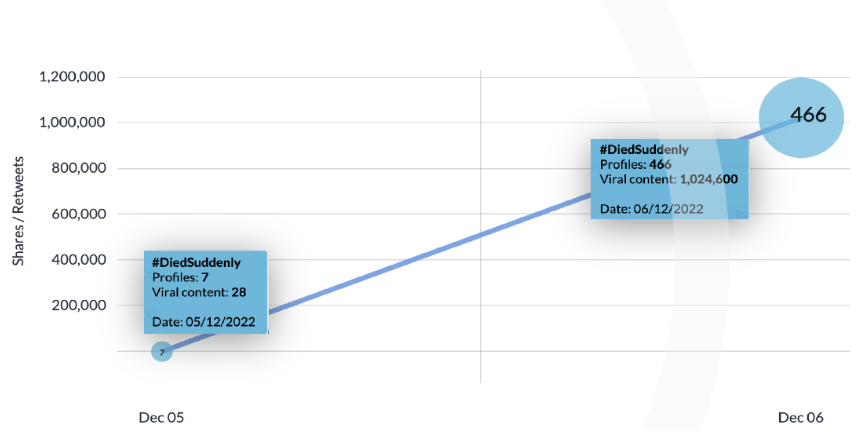

This pattern is not unusual: posts can start small and gradually trend higher and higher. But this hashtag spread very quickly: between December 5 and 6, the number of profiles using #DiedSuddenly increased by 6,557%, from 7 profiles responsible for 28 retweets to 466 profiles responsible for 1,024,600 retweets.
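As a quick sanity check on the arithmetic, the 6,557% figure matches the growth in the number of profiles using the hashtag (7 on December 5, 466 on December 6). The sketch below reproduces it with a standard percentage-increase formula. The function and variable names are ours, for illustration only; the post does not describe how Cyabra computes its figures.

```python
def percent_increase(before: float, after: float) -> float:
    """Return the percentage change from `before` to `after`."""
    if before == 0:
        raise ValueError("percentage increase is undefined for a starting value of 0")
    return (after - before) / before * 100


# Figures quoted in the post for December 5 -> December 6.
profiles_before, profiles_after = 7, 466
retweets_before, retweets_after = 28, 1_024_600

profile_growth = percent_increase(profiles_before, profiles_after)
retweet_growth = percent_increase(retweets_before, retweets_after)

print(f"Profiles using the hashtag: {profile_growth:,.0f}%")  # 6,557%
print(f"Retweets: {retweet_growth:,.0f}%")
```

Run on the same two days, the retweet counts grow by a far larger percentage than the profile counts, which is why it matters to state which quantity a growth figure refers to.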

This content might have been pushed by authentic profiles, but continued spreading due to fake ones. Between December 15 and 16, Cyabra uncovered another surge of mentions and retweets, with a 554.69% increase in inauthentic activity.

As mentioned above, Covid-19 conspiracy theories have been around for years now, and while the pandemic appears to be under control in many parts of the world, conspiracy theorists have been known to latch onto any obscure piece of news and amplify it.

22% of the profiles in the #DiedSuddenly conversations during December were inauthentic, and overall, inauthentic profiles led 11% of the conversations scanned by Cyabra.

Conspiracy theories thrive on providing a sense of comfort by helping people find “reason” in random occurrences, especially tragic ones. As a result, people in vulnerable situations are frequently taken advantage of by scammers, both in person and on social media. Here, we’ve witnessed an organized disinformation campaign, with bots exploiting grieving people to promote harmful propaganda. A cautious reader might roll their eyes when they come across an anti-vaxx message spread by fake users, but they will not be as wary of an authentic message, even if it is supported by inauthentic profiles taking advantage of its presumed credibility to achieve massive reach.

Read the second part of this analysis to learn how NOW Affinio, using Cyabra’s analysis of authentic and inauthentic profiles, segmented each group of profiles.

The Power of Two Lenses on Social Context

Cyabra and NOW Affinio have partnered to bring nuanced insights to social conversations, whether around trending movies like “Died Suddenly” or anything else. As audience authenticity experts, Cyabra brings the ability to identify engaged profiles and tag them as real or fake. As affinity-based audience insights experts, Affinio uncovers like-minded groupings among profiles to understand the interests that bind them. Either platform can be used as a starting point to study an audience.

Reach out to Cyabra or info@affinio.com to learn how you can gain more clarity on your stakeholder voices.