The introduction of GenAI has significantly altered the online threat landscape. Bad actors learned long ago to harness social media for their needs – conducting social engineering attacks, engaging in identity theft and impersonation, and spreading disinformation and fake news to manipulate social discourse. Since GenAI was introduced to the public in the past year, it has been adopted as a new weapon in the bad actors’ arsenal – one they are getting steadily better at using. This is only the beginning of what will be an unprecedented change in the realm of social media threats.

This article explains the different methods through which malicious actors utilize GenAI for their purposes.

The ABC of Disinformation

Let’s go back to basics: in the world of threat intelligence and information warfare, there are three essential elements:

- Actors – those orchestrating the attacks, operating fake accounts to manipulate public discourse.

- Behavior – the type of social engineering tactics the bad actors use: manual or automated, impersonations or phishing, fake news or spam.

- Content – the material bad actors create and use, which varies in format from platform to platform (text / images / videos), along with the narratives that material promotes.

Now, let’s look at GenAI’s effect on each of these components:

Actors

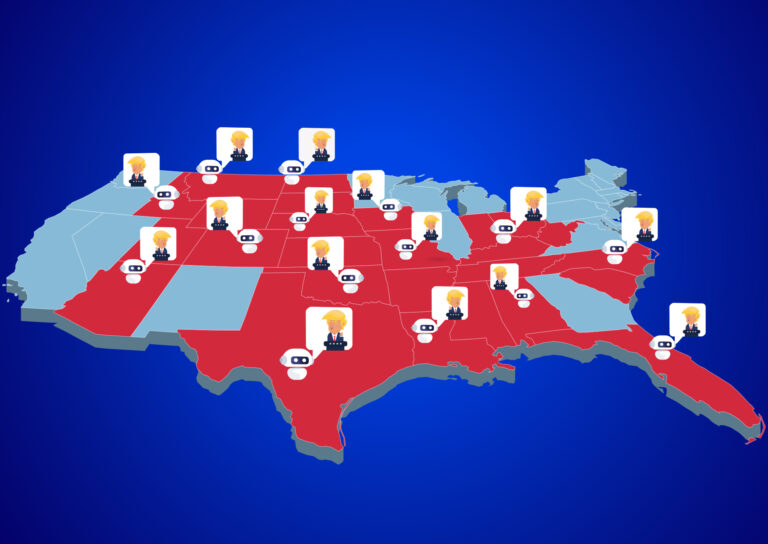

When it comes to the actors, much of the creation of fake profiles is already automated, utilizing bot farms that can swiftly create masses of fake accounts on every social media platform. However, GenAI increases their effectiveness. In the past, bad actors had to either use generic stock images for profile pictures, which made the profiles look unreliable, or steal a real person’s photo and risk being caught as impersonators. Nowadays, GenAI image generators answer their needs. Images produced by generators like “This Person Does Not Exist” look more authentic than ever, and some generators will also produce additional photos of the same made-up person, supplying the new fake profile with a visual history that makes it look even more credible. There’s no need for creativity in the naming department, either: GenAI content generators can supply a variety of fake names that sound modern, local, and age-appropriate. Would you like to create a community of American college boys? Elderly Ukrainian women? Chinese children aged 10 to 13? GenAI’s got you covered.

Behavior

While bad actors still operate some sock puppets manually to better control the message, especially in the crucial early stages of a fake campaign, most fake profiles online are actually bots – and they are the most effective tool for influence operations. A bot network’s power is in its numbers – thousands of fake profiles can lie dormant until the moment they are given the order to flood social media conversations with propaganda and fake news.

When it comes to behavior, bad actors’ work is easier than ever. In the past, automated bot networks could only post, reply, and repost using the same texts. Now they are able to engage in conversations with each other and with real profiles, using AI-generated texts to interact and respond in a natural manner and to adjust the message to different audiences. Their output is also getting increasingly difficult to tell apart from human-created content.
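To make the shift concrete, here is a minimal sketch of why older copy-paste bot networks were comparatively easy to spot, and why AI-generated variations are not. It is an illustration only, not a description of any real detection product: the `(account_id, text)` post format, the function names, and the `min_accounts` threshold are all assumptions made for this example.

```python
from collections import defaultdict
import re


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def find_copy_paste_clusters(posts, min_accounts=3):
    """Return normalized texts posted by at least `min_accounts` accounts.

    `posts` is an iterable of (account_id, text) pairs. This schema is
    hypothetical and chosen only for the illustration.
    """
    accounts_by_text = defaultdict(set)
    for account_id, text in posts:
        accounts_by_text[normalize(text)].add(account_id)
    return {
        text: accounts
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


# Made-up sample data: three near-identical posts and one paraphrase.
posts = [
    ("acct_1", "Candidate X is LYING to you!"),
    ("acct_2", "candidate x is lying to you"),
    ("acct_3", "Candidate X is lying to you!!!"),
    ("acct_4", "I can't believe how often Candidate X bends the truth."),
]
clusters = find_copy_paste_clusters(posts)
print(clusters)
```

The three near-identical posts collapse into one cluster, while the paraphrased post from `acct_4` slips through. A network that rewrites every message with GenAI produces only posts of that second kind, which is why simple duplicate matching is no longer enough.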

Content

Unfortunately, GenAI shares and repeats misleading content even without the bad actors’ assistance, simply because of how its underlying models work. The World Health Organization has warned against misinformation in the use of AI in healthcare, and GenAI companies are in a constant struggle with their models’ “hallucinations” (false or fabricated output that large language models tend to generate).

Still, there is no denying that GenAI content has changed the game for a lot of people. In recent months, we have seen how GenAI can be an asset for marketing teams, writers, developers, and others, saving time and helping them do their work more efficiently.

Of course, GenAI is even more valuable to the bad actors. Content is where GenAI’s abilities truly shine: any bad actor can now create millions of texts, images, or videos (deepfakes and others) with a single click, and can use all of that content to promote any agenda they desire. Just a few months ago, a fake GenAI image of the Pentagon exploding spread through X (Twitter) like wildfire, causing the S&P 500 to drop by 30 points and resulting in a $500 billion market cap swing. Other examples have popped up since, including a fake image of former president Trump being arrested, a crocodile on a Florida street following a flood, fake news sites spreading misinformation, and more. Conversely, some content that was entirely real has been accused of being fake, like President Biden’s speech following the January 6 riots. We can expect to see many more of these visual and textual scams in the years to come, as well as much more confusion and mistrust regarding real images and videos.

What’s to Come, and How to Defend Against It

It’s been almost a year since ChatGPT became available to the public, and it was soon followed by many other tools. For better or worse, GenAI has already had a huge impact on our lives. But in the hands of bad actors, it is a dangerous tool that can cause massive damage – to companies, to the stock market, to governments, and to everyone else. GenAI text models currently work best in English, but will soon be able to generate content of similar quality in other languages.

While we already have trouble telling GenAI content apart from human-created content, it’s important to remember that GenAI will just keep improving. This is why Cyabra created botbusters.ai, a free-to-use tool to help the public detect GenAI text and images and identify fake profiles.

Defensive technology will always be, by its very definition, one step behind the bad actors. This is why it’s crucial that the private and public sectors make use of advanced tools with the ability to monitor social media for threats, detect GenAI content, and identify fake accounts. If you’d like to learn more, contact Cyabra.