Here’s a fact: fake profiles are growing not only in number but in impact. Creating a fake profile once required manual labor. Today, bot networks can be bought or created with one click, giving anyone the power to operate thousands of fake profiles and manipulate social media discourse. This used to be mostly a public sector concern, but many of the new bot networks and fake profiles now target the private sector, spreading brand disinformation.

Why do our existing PR, communications and marketing teams struggle with this problem? What tools and skills are needed to deal with this destructive evolution of technology? And do we need a new role in our organization to tackle those threats?

The Destructive Evolution of Fake Profiles

With the arrival of GenAI, fake profiles have become far better at appearing authentic, and their handlers make full use of it. Image generators like “This Person Does Not Exist” are used to create unique artificial profile pictures, vacation photos, and other “memories” featuring the same fake person. Text generators produce thousands of posts and interactions with one click while making the fake profiles sound native and local. In the past year, GenAI has also been used to create supporting fake content, such as fake websites, magazines, blogs and news sites. Other AI generators can produce every demographic detail a fake profile requires: local names, emails, addresses, phone numbers, and so on.

While these profiles look authentic, their real power lies in their scale. They never tire or stop, and they can post hundreds of times a day while infiltrating authentic communities on any social media platform.

Armed with AI tools, fake profiles are highly destructive. Until recently, the private sector was free of these concerns; state officials and government institutions were the ones kept up at night. But in the past year, brand disinformation has been dominating the discourse on social media, causing massive reputational damage.

What is Brand Disinformation?

The term “disinformation” is usually associated with societal issues such as elections, protests, or civil unrest. Brand disinformation occurs when fake profiles insert themselves directly into discourse about companies and brands in order to manipulate it.

There are two types of brand disinformation attacks:

- Brand-Related Coordinated Campaign: An attack aimed directly at damaging your brand’s reputation. It could be triggered by a business decision you made, a stand you take (“Go Woke, Go Broke”), a presenter you choose, a DEI policy you practice, an unfortunate marketing campaign that looks too similar to a controversial issue, or even a sector-wide crisis.

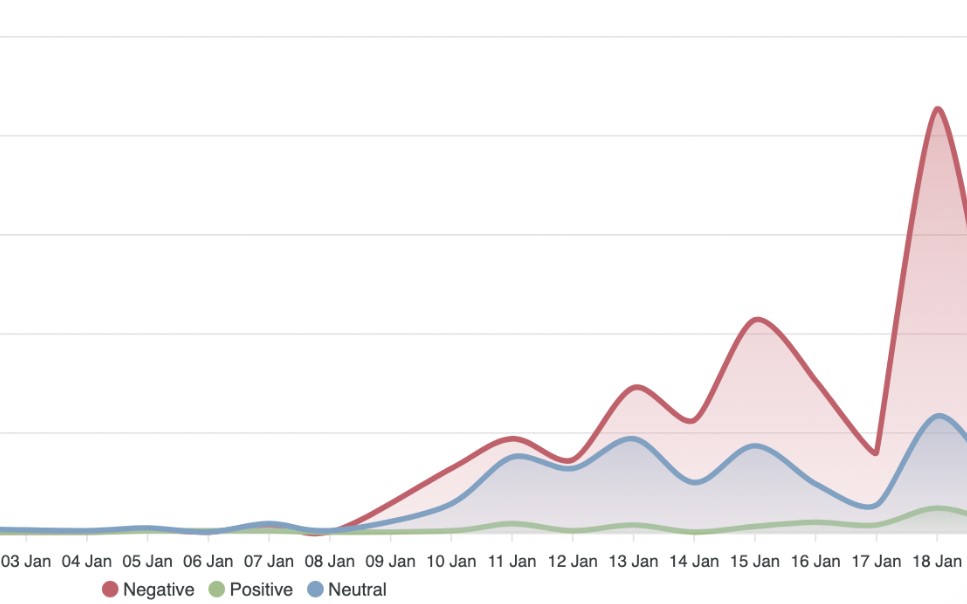

In these attacks, your brand is the star. The perpetrators can be competitors, disgruntled customers, people who dislike your brand values, or anyone else seeking to cause harm. In most cases, bots involved in direct attacks are present in the discourse from the very start, driving or even initiating the attack. In other cases, they wait for the negative discourse to pick up before they start promoting and spreading it. They engage with each other and with authentic profiles, try to infiltrate authentic circles, and share authentic profiles’ content (which, as mentioned, they can do hundreds of times per day).

- Collateral Attack: An attack in which bots exploit a negative conversation about your brand purely for its virality. It usually begins when a company starts being discussed negatively on social media. The moment a negative hashtag or critical conversation gains traction, bots originally created for a completely different purpose latch onto the topic or hashtag and use it to promote their operator’s agenda, for example, pushing a candidate in a country’s national election. By riding trending hashtags, these bots push their content’s exposure and engagement to new heights.

Even though the bots behind them have nothing to do with your brand, these attacks harm your reputation: fake profiles push the negative narratives aimed at you and spread them to a vast audience. Cyabra has identified this phenomenon affecting fashion brands, financial institutions, ride-hailing apps, and many others.

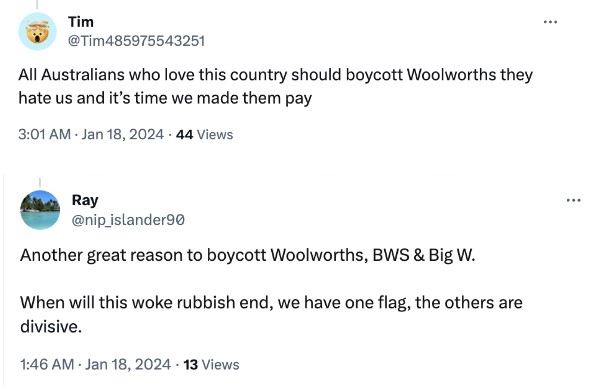

A subcategory of this attack involves bots that use negative hashtags simply to appear active and gain credibility through a lively timeline. These posts are nothing but spam, but because they cleverly exploit currently trending hashtags, they can easily reach thousands of views while amplifying a negative hashtag that attacks your brand. Check out the posts below:

Defensive Communications: The Role You’re Missing

While these two attacks are very different, they have something in common: bots amplify negative discourse, turning what might have been a passing trend into a massive PR crisis.

Your PR team may be great and its crisis management skills amazing, but it doesn’t have the tools to face this attack. Neither does your excellent customer service team. The bots stay indefinitely or, at best, until the crisis dies out. They won’t be pacified by promises, apologies or coupons.

Marketing, PR and communications teams regularly, and unknowingly, waste time and money dealing with fake profiles. You might be spending hours answering disgruntled customers, unaware that you’re interacting with bots. You might be responding to what seems like a massive crisis but actually consists mostly of bots, while your real audience is far less negative toward the brand than it appears. You might be basing your whole campaign strategy on brand disinformation and fake trends.

2024 is the most significant election year in history, with elections held in countries home to more than 4 billion people. This means bots and fake profiles are more present than ever on social media platforms, and your teams lack the training and tools to handle them.

In the public sector, these types of attacks are handled by intelligence units and analysts. Now that these threats have surfaced in the private sector, brands are missing the link that connects cybersecurity with PR, marketing and communications. This new role is best described as Defensive Communications, or Defensive Social Media. It involves monitoring social media platforms, identifying potential threats or attacks, raising awareness or alarms about these threats, and implementing strategies to mitigate or counteract them.

To learn more about the skills, education, tools and training needed for defensive communications, and to equip your team to detect fake campaigns, identify bot networks, and respond in real time, contact Cyabra.