This blog post explores the 5 different ways in which bad actors utilize GenAI to make disinformation spread more easily and effectively, and explains why, with the help of GenAI, fake news and propaganda have become more detailed, layered and credible-looking.

1. GenAI Helps Create Bots That Look Authentic

Even before GenAI came into our lives, building an intricate bot network was already a task that could be carried out at scale using machine learning. In the past, however, we could easily tell those bots apart from authentic profiles: they had generic profile pictures (if they had one at all), they were all created on the same day, they had no friends or only friends with similar, sometimes identical profiles, and they posted only about the specific topic they were created for.
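To make those classic warning signs concrete, here is a minimal Python sketch of how they might be checked. It is an illustration only, not a description of how any real detection tool works: the `Profile` fields, the `legacy_bot_signals` function, and the threshold of three accounts created on the same day are all assumptions made for this example, and the "friends with similar profiles" sign is left out for brevity.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Profile:
    # Hypothetical fields, not tied to any real platform API.
    handle: str
    created: date
    has_custom_picture: bool
    friend_count: int
    post_topics: list[str] = field(default_factory=list)


def legacy_bot_signals(profile: Profile, creation_counts: Counter) -> list[str]:
    """Return the classic warning signs that apply to one profile."""
    signals = []
    if not profile.has_custom_picture:
        signals.append("generic or missing profile picture")
    if creation_counts[profile.created] >= 3:  # illustrative threshold
        signals.append("created on the same day as several other accounts")
    if profile.friend_count == 0:
        signals.append("no friends")
    if profile.post_topics and len(set(profile.post_topics)) == 1:
        signals.append("posts about a single topic only")
    return signals


# Made-up sample data for the illustration.
profiles = [
    Profile("a1", date(2021, 3, 1), False, 0, ["election", "election"]),
    Profile("a2", date(2021, 3, 1), False, 0, ["election"]),
    Profile("a3", date(2021, 3, 1), True, 2, ["election", "election"]),
    Profile("jo", date(2015, 6, 9), True, 180, ["football", "work", "tv"]),
]
creation_counts = Counter(p.created for p in profiles)
report = {p.handle: legacy_bot_signals(p, creation_counts) for p in profiles}

for handle, signals in report.items():
    print(handle, len(signals), signals)
```

Rules this simple were enough for the older generation of bots. They rely on exactly the surface details that a fake profile with a unique picture and a varied posting history no longer gets wrong.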

When GenAI technology was integrated into bot networks, it changed the game.

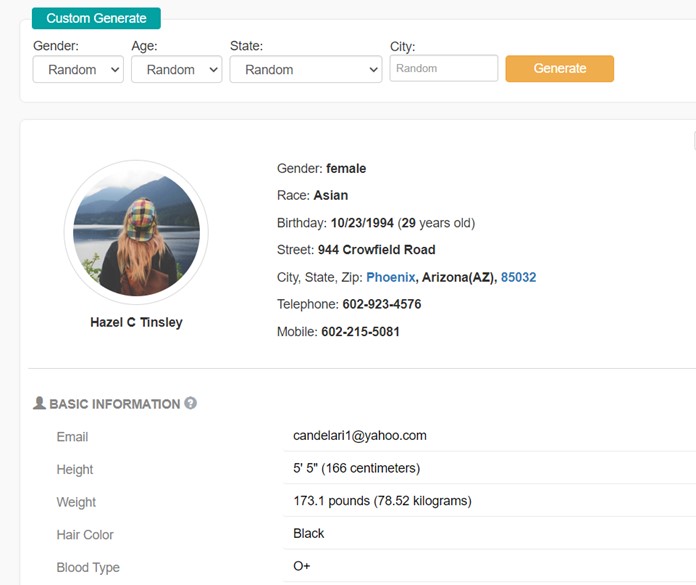

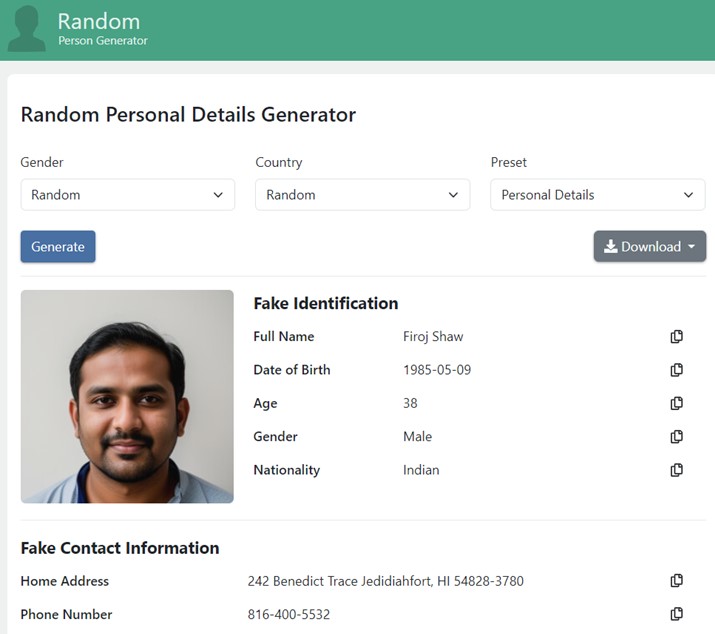

The most basic parameter, a distinguishable profile picture, was one of the easiest to accomplish: bad actors no longer need to go through stock banks of images or steal a profile picture from a real person and risk being caught. GenAI image generators like “This Person Does Not Exist” can produce photos of different people with unique features and flaws, making them look more authentic than ever. A bad actor can create a full community of fake profiles of any gender, age, color, or nationality, and even use a GenAI “person generator” for authentic-sounding names, as well as other personal details like birthday, address, phone number, and even blood type.

2. GenAI Can Fabricate Interests and History

The challenge of creating a real-looking fake profile doesn’t end with name and photo. Many social media users have become more savvy about fake profiles, and are suspicious enough to check for the classic signs, like a recent creation date, the number of friends, and most of all – history. A profile that was created two years ago but lay dormant until it started tweeting about a crucial trending matter last week is very likely fake. But if the profile was active and shared unrelated content – for example, if it posted about a favorite TV show or football team, or complained about college or work – it is much more likely to be classified as real. All of this personalized content can be created using GenAI text generators like ChatGPT and Bard. In addition, GenAI image generators can create more than just one fake profile picture: they can provide a whole gallery of photos featuring the same person to accompany it. Those methods allow bad actors to easily manufacture full records and a history for any fake profile.
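The "dormant, then suddenly active" sign described above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the function name `dormant_then_active`, the one-year minimum account age, and the 14-day activity window are invented for the example and are not taken from any real detection product.

```python
from datetime import date, timedelta


def dormant_then_active(
    created: date,
    post_dates: list[date],
    today: date,
    min_age: timedelta = timedelta(days=365),      # assumed threshold
    recent_window: timedelta = timedelta(days=14),  # assumed threshold
) -> bool:
    """True when an old account has posted only within the recent window."""
    if not post_dates:
        return False  # no activity at all, so nothing to judge
    old_enough = today - created >= min_age
    first_post = min(post_dates)
    return old_enough and today - first_post <= recent_window


today = date(2024, 1, 15)

# Created two years ago, silent until last week.
sleeper = dormant_then_active(
    date(2022, 1, 10), [date(2024, 1, 8), date(2024, 1, 9)], today
)

# Same creation date, but with posts spread across the account's lifetime.
with_history = dormant_then_active(
    date(2022, 1, 10),
    [date(2022, 2, 1), date(2023, 5, 20), date(2024, 1, 9)],
    today,
)

print(sleeper, with_history)
```

The second call shows the weakness: once older posts exist, a check like this no longer flags the account, and it cannot tell whether that history is genuine or generated.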

3. GenAI Overcomes the Language Barrier

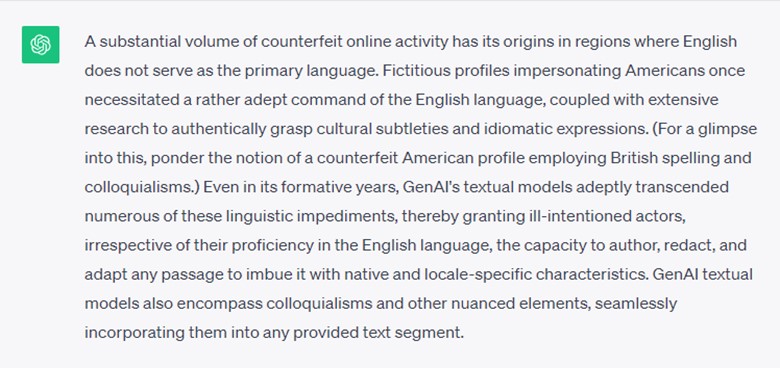

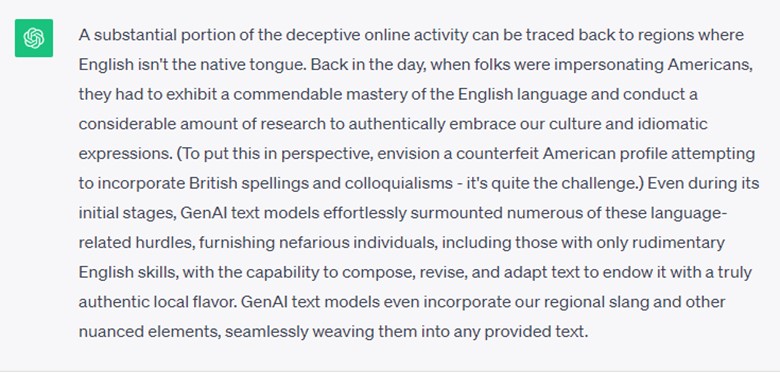

A substantial amount of the fake activity online originates from countries in which English isn’t a native language. Fake profiles pretending to be Americans once required fairly good English skills as well as a decent amount of research to account for culture and slang (if you wonder why, imagine a fake American profile using British spelling and idioms). Even in their early days, GenAI text models easily jumped over many of those language barriers, giving bad actors with average or even basic English the ability to write, rewrite and adjust any text to make it sound native as well as local. GenAI text models also know slang and other nuances, and can incorporate them into any given paragraph.

Check out the paragraph you just read, rewritten by ChatGPT to sound more British:

And here is the same paragraph rewritten to sound more American – notice the subtle differences:

GenAI can bypass the language barrier in any language. But it does more than that: its vast data sets offer the ability to test and adjust the message in every style and tone, therefore making it look more personalized and trustworthy.

4. GenAI Can Build a Fake Community

Creating a good disinformation campaign is not just about a mass of diverse, reliable-looking fake profiles. It’s also about the connections between them: at the beginning of every influence operation campaign, fake profiles interact with each other as well as with genuine profiles, taking advantage of the algorithm to echo and spread their messages.

The integration of GenAI into bot networks greatly affected this ability. Where once a fake profile would jump into a conversation and share either a message that had nothing to do with the topic or a link with no context, bots can now use the same GenAI models that analyze a user’s question and predict a fitting answer, making the discussion sound more coherent. Authentic profiles find themselves unwittingly wasting time arguing with bots, while the bots themselves can keep interacting with one another endlessly, making this echo chamber even more effective and raising the conversation’s trending potential.

5. GenAI Supplies Added Layers of Content

Finally, as every bad actor knows, a disinformation campaign never stands on its own. It’s always part of a bigger strategy – tarnishing a company’s reputation, affecting election results, changing public opinion on any matter. This means the campaign can always benefit from more content – for example, fake blogs, magazines, or news sites supporting the same claim as the fake profiles, filled with other types of content to appear authentic and trustworthy. There’s no need for a writer to compose those dozens or even hundreds of content pages – within moments, any GenAI text generator can produce hundreds of fake articles, stories, and snippets.

Fake profiles can spread fake news and create chaos, mistrust, or confusion. However, when combined with a GenAI-created site that looks legitimate and validates their fake agenda, they become a weapon of mass disinformation.

This blog series delves into the risks of GenAI, and explains why it’s crucial that the private and public sectors make use of advanced SOCMINT and OSINT tools to monitor social media for threats, detect GenAI content, and identify fake accounts. This is the second part in Cyabra’s GenAI series, explaining why this new technology, which came into our lives in the past year, makes the work of bad actors so much easier. In the previous story, we explained why bad actors find GenAI so appealing, and the connection between GenAI and the ABC of Disinformation (Actors, Behavior and Content). If you’d like to learn more, contact Cyabra.

As always, we recommend using our free-to-use tool, botbusters.ai, to detect GenAI text and images, and to identify fake profiles.