After a turbulent few months at Microsoft – marked by major layoffs in May and July and the cancellation of several gaming projects from its Activision Blizzard acquisition – Cyabra conducted a three-month investigation into online conversations about the company, analyzing narratives, sentiment, and the profiles driving the discussion.

Here’s what Cyabra uncovered:

TL;DR

- 29% of the profiles discussing Microsoft’s AI layoffs were fake (nearly three times the average rate in online conversations), indicating a coordinated disinformation campaign

- Using sophisticated tactics, fake accounts successfully influenced real users to call for a boycott of Microsoft, as the toxic narratives spread to reach over 2 million potential views

- Three primary false narratives were promoted to harm Microsoft’s reputation: that the company was replacing American workers with foreign H-1B visa holders, that the layoffs were meant to fund AI initiatives, and that the company had suggested fired employees turn to Copilot AI for emotional support

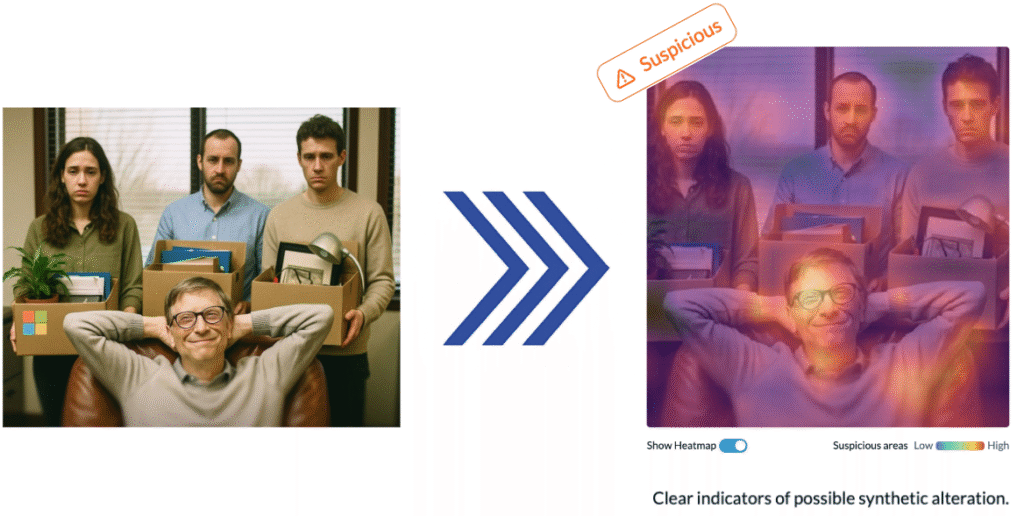

- Nearly 40% of visual content shared by fake profiles was AI-generated, including deepfake images of Microsoft leadership appearing celebratory about layoffs

A Crisis Influenced by Fake Accounts

Disinformation campaigns pose significant threats to business reputation and stability, even for giants such as Microsoft. Fake news costs companies approximately $39 billion in stock market losses annually, with global financial impact reaching around $78 billion (World Economic Forum report).

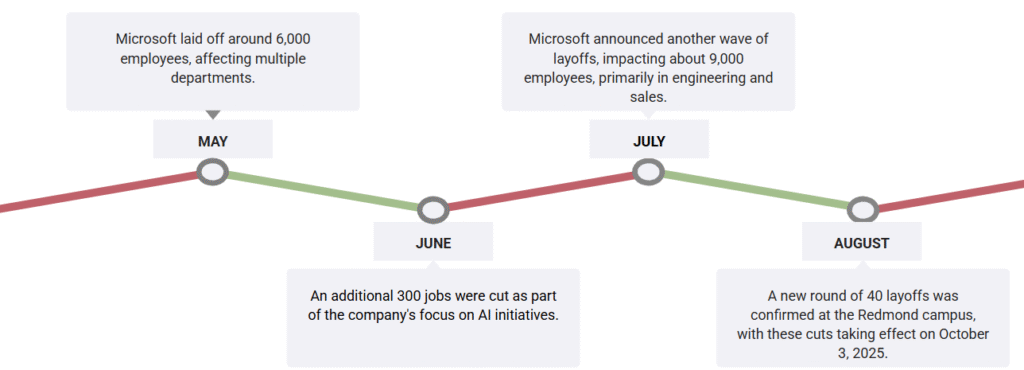

Cyabra investigated online discourse about Microsoft’s AI-driven layoffs between May and August 2025. During this period, Microsoft implemented several rounds of layoffs: 6,000 employees in May, 300 in June, 9,000 in July, and 40 more in August.

Cyabra’s analysis revealed a sophisticated disinformation campaign orchestrated to manipulate public sentiment against the company.

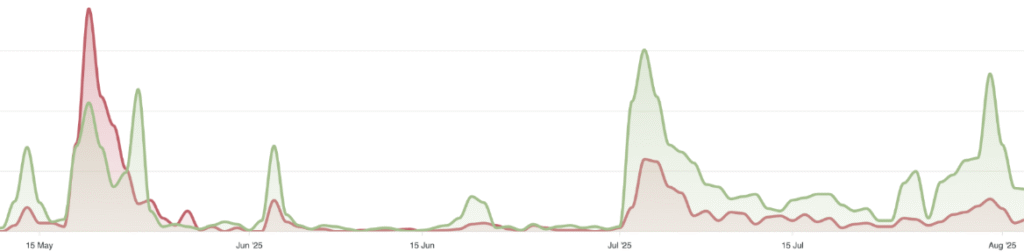

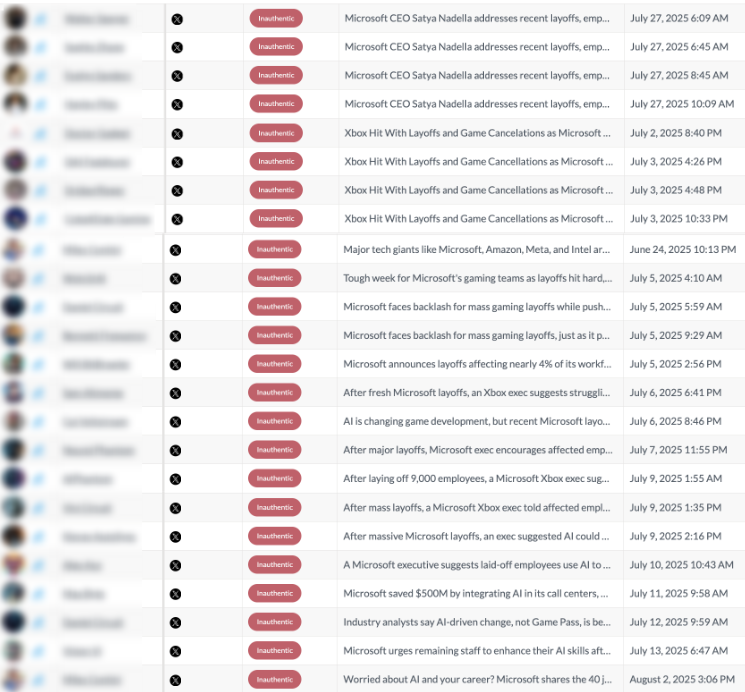

The graph shows how much of the conversation fake profiles accounted for: after major company events and announcements, the presence of fake profiles (red) in the discourse grew sharply compared with that of authentic profiles (green).

The scale of inauthentic activity targeting Microsoft was unprecedented. Cyabra found that 29% of analyzed profiles on X were fake, nearly triple the platform’s average. With just 1,256 posts and comments, the coordinated fake profiles were able to manipulate the conversation around Microsoft, generating thousands of engagements and hundreds of thousands of potential views.

The timing of the attack was strategic, with peak activity perfectly aligned with Microsoft’s layoff announcements in May, July, and August 2025. This pattern suggests a deliberate campaign to exploit corporate actions and maximize negative sentiment at moments of heightened public attention.

Cyabra’s investigation uncovered clear evidence of coordination: Clusters of inauthentic accounts exhibited highly similar behavioral patterns, including synchronized posting times and identical messaging tactics. Many profiles posted almost identical messages within minutes of each other, indicating a centralized command structure.
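To make the idea of "near-identical messages posted within minutes of each other" more concrete, here is a minimal Python sketch of one simple heuristic. It is not Cyabra’s detection system: the `Post` record, the `coordinated_pairs` function, the five-minute window and the 0.9 similarity threshold are hypothetical choices made for illustration, and text similarity is measured with the standard library’s `difflib.SequenceMatcher`.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from difflib import SequenceMatcher


@dataclass
class Post:
    # Hypothetical minimal post record; real platform data has many more fields.
    account: str
    text: str
    posted_at: datetime


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial edits don't hide duplicates.
    return " ".join(text.lower().split())


def coordinated_pairs(posts, window=timedelta(minutes=5), threshold=0.9):
    """Flag pairs of near-identical posts from different accounts that were
    published within `window` of each other.

    `window` and `threshold` are illustrative assumptions, not values from
    Cyabra's analysis.
    """
    flagged = []
    # Sort by time so the inner loop can stop once posts are too far apart.
    ordered = sorted(posts, key=lambda p: p.posted_at)
    for i, a in enumerate(ordered):
        for b in ordered[i + 1:]:
            if b.posted_at - a.posted_at > window:
                break  # all later posts are even further away in time
            if a.account == b.account:
                continue  # repeats by a single account are a different signal
            ratio = SequenceMatcher(None, normalize(a.text), normalize(b.text)).ratio()
            if ratio >= threshold:
                flagged.append((a.account, b.account, round(ratio, 2)))
    return flagged


# Usage with made-up sample posts (not data from the investigation).
t0 = datetime(2025, 7, 2, 9, 0)
posts = [
    Post("acct_a", "9,000 layoffs and 6,300 new visas. Boycott Microsoft!", t0),
    Post("acct_b", "9,000 layoffs and 6,300 new visas.. Boycott Microsoft!", t0 + timedelta(minutes=2)),
    Post("acct_c", "Sad news for everyone affected today.", t0 + timedelta(minutes=3)),
    Post("acct_d", "9,000 layoffs and 6,300 new visas. Boycott Microsoft!", t0 + timedelta(hours=3)),
]
print(coordinated_pairs(posts))  # only the acct_a / acct_b pair is flagged
```

Sorting by timestamp lets the inner loop stop as soon as two posts fall outside the window, so only temporally close posts are compared; `acct_d` repeats the same text but hours later, so it is not flagged. Pairwise string comparison like this would likely be too slow for very large datasets, where near-duplicate techniques such as MinHash or embedding similarity are more typical.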

Perhaps most concerning was the sophisticated use of AI-generated content and deepfakes. Cyabra discovered that nearly 40% of visual content shared by fake profiles was AI-generated, including deepfakes showing Microsoft executives appearing celebratory about the layoffs. One particularly damaging example showed Bill Gates smiling while laid-off employees appeared in the background. Cyabra’s detection system flagged this image as highly suspicious, showing clear indicators of AI manipulation.

The Spread of False & Toxic Narratives

Fake accounts consistently promoted three harmful narratives designed to damage Microsoft’s reputation:

H-1B Visa Replacement Claims: Fake profiles claimed that Microsoft was replacing American workers with foreign workers on H-1B visas, specifically citing “9,000 layoffs and 6,300 new visas.” This framed the layoffs as a deliberate policy of replacing domestic workers with cheaper foreign labor.

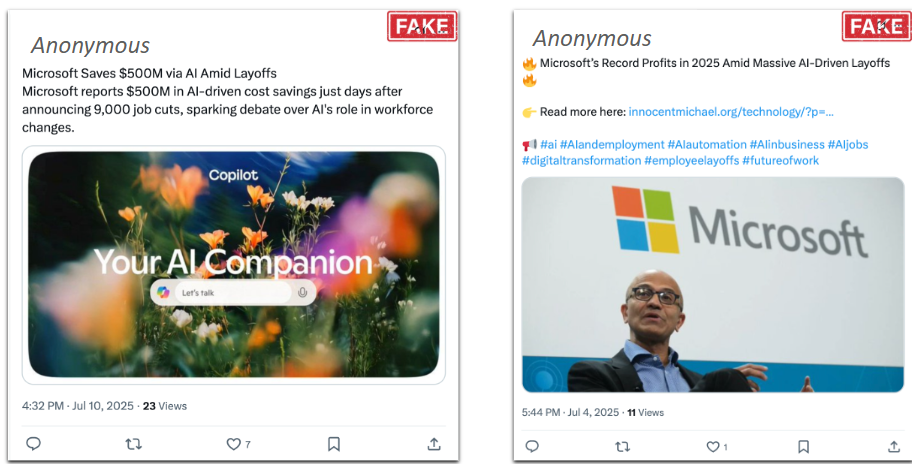

AI Funding Narrative: A second narrative portrayed the layoffs as primarily intended to fund AI initiatives. Posts tied the layoffs directly to AI investment, claiming that Microsoft was “saving $500M via AI” while cutting 9,000 jobs. This narrative exploited Microsoft’s record profits to present the mass layoffs as ironic, cruel and greedy.

Copilot’s “Emotional Support”: The most damaging narrative claimed that Microsoft had suggested laid-off employees use Copilot AI for emotional support, portraying the company as remarkably insensitive. The notion of executives urging employees to cope with the emotional fallout of losing their jobs by turning to AI, the very technology portrayed as a factor in those job losses, was particularly harmful to Microsoft’s brand reputation.

The Business Impact

This coordinated campaign successfully influenced authentic users to adopt anti-Microsoft messaging and call for boycotts. As real users amplified it, the harmful content reached a total of 2,664,000 potential views, triple the reach of the fake accounts alone.

Corporate disinformation campaigns often exploit legitimate company actions to fuel larger controversies. In Microsoft’s case, the layoffs provided a foundation for fake accounts to build narratives that eventually influenced real users to amplify negative sentiment.

How Brands Can Fight Back

For comms and PR teams, crisis communication experts, brand strategists and corporate executives, Microsoft’s experience offers critical lessons in countering disinformation campaigns:

Monitor for Coordinated Activity: Implement tools to detect unusual conversation patterns and inauthentic behavior during sensitive corporate announcements. As Cyabra’s case studies demonstrate, early detection can prevent manufactured narratives from gaining traction.

Implement Deepfake Detection: Deploy technology to identify manipulated images that could damage leadership credibility.

Distinguish Between Manufactured and Authentic Outrage: Develop capabilities to differentiate between coordinated attacks and genuine customer concerns.

Establish Crisis Response Protocols: Create clear plans for addressing disinformation, including dedicated teams ready to respond. Best practice suggests that companies should maintain open communication channels to quickly address false information.

The Microsoft case illustrates the evolving nature of reputation threats in the digital age. The line between authentic criticism and coordinated attacks is increasingly blurred, requiring sophisticated detection tools and response frameworks to protect brand integrity.

Download Cyabra’s full report about the disinformation campaign that surrounded Microsoft