We’re all aware of the presence of fake profiles and bots on social media by now. After all, it seems like every week we hear a new story about them causing harm in one way or another.

But what do bots on social media actually do? From election disinformation to coordinated smear campaigns, they continue to wreak havoc on everything from public perception to brand reputation across industries.

No one can pinpoint the exact number of bots and fake profiles on social media, but they can make up anywhere from 5% to 50% of a social media conversation, depending on the topic.

These bots can be created for a multitude of reasons, all of which are malicious in one way or another. Here are the three most common ways they’re being used:

Bots Used to Attack

The most common way bots are being used is to attack. Malicious actors deploy thousands of bots against a target, which could be a brand, company, public figure, or public sector entity, in an attempt to damage its reputation and undermine its credibility.

Sheer numbers work to their advantage: the bots create the illusion of widespread opposition, manipulating the public into thinking the attack has more backing than it truly does.

This illusion of mass discontent can have serious consequences for both the public and the private sector, fueling confirmation bias in like-minded individuals.

By overwhelming social media platforms with fake posts, likes, and comments, bots create a false sense of momentum that can sway public opinion on highly important topics. We saw this happen during the COVID-19 pandemic, when fake profiles were used to promote conflicting narratives about vaccines.

Bots Used to Divert

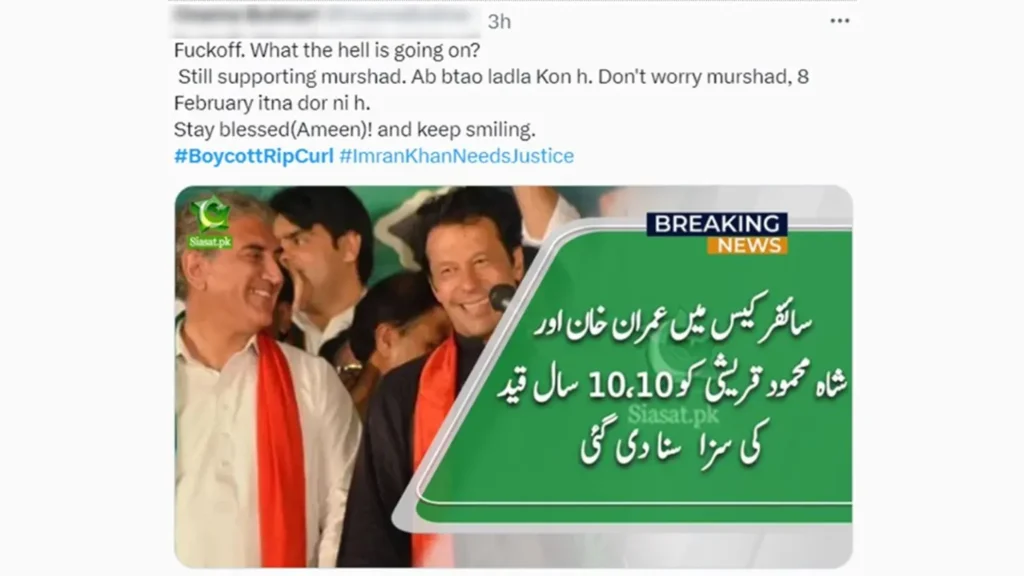

Another tactic employed by malicious actors is to have fake accounts latch on to trending hashtags and exploit their visibility, even when the hashtag has no connection to the intended message.

This is a relatively new strategy that focuses on exploiting trending topics for exposure rather than blending into the conversation, which allows fake accounts to “hijack” hashtags.

One recent case involved the Rip Curl Boycott, where fake accounts with no genuine interest in the conversation latched on to the hashtag as a means of promoting their own cause.

Bots Used to Repel

As opposed to attacking bots, which aim to discredit or harm a target, repelling bots are used to counteract negative narratives by artificially boosting positive content or silencing criticism.

While both types oversaturate social media with posts, repelling bots have the harder task, as spreading positive messages is generally more difficult.

A notable example of this occurred during the 2022 World Cup in Qatar, when fake profiles were used to drown out the widespread criticism and controversy surrounding the event, including serious human rights violations and the tragic deaths of over 6,500 workers.

Apart from high-profile events like the World Cup, the use of repelling bots is particularly prevalent in political spheres as a means to maintain an appearance of widespread support, even when a political candidate or a policy isn’t as popular as it may seem from skimming through social media interactions.

How Cyabra Can Help

While these three uses are just the tip of the iceberg when it comes to the situations in which bots can be deployed, they all share a common thread: manipulation.

Threat actors use manipulation tactics to shift the public discourse in whichever way they see fit, which highlights the need for detection and prevention tools.

Cyabra’s platform uses a combination of machine learning and artificial intelligence, drawing on hundreds of different parameters, not only to detect fake accounts but also to identify them and mitigate their impact, helping to protect both the public and the private sector.
