When Target announced plans to roll back its DEI (Diversity, Equity, and Inclusion) initiatives, the backlash was swift. Hashtags like #BoycottTarget and #CancelTarget spread like wildfire, the company's stock price dropped, and online outrage intensified. But the viral boycott wasn't driven only by consumer anger; it was strategically amplified by fake profiles.

Cyabra conducted an extensive investigation into the Target backlash between January and June and found that more than a quarter of the accounts taking part in the conversation were fake. These inauthentic accounts played a central role in shaping the narrative, fueling outrage, and driving mobilization.

TL;DR

- 27% of accounts discussing Target between January 1st and April 21st were fake, producing 1,037 posts and comments that helped fuel the backlash.

- Those posts gained 2,262 engagements and reached a potential 3 million views.

- Negative sentiment spiked by 764% on January 25th, the day after Target announced plans to roll back its DEI initiatives.

- Target’s stock plummeted by the end of February, erasing about $12.4 billion in market value.

- In a follow-up analysis, 39% of accounts posting about Target between May 27th and June 3rd were still fake, pointing to sustained, long-term coordination.

A Backlash Engineered by Bots

Following Target's announcement of its DEI rollback, Cyabra tracked related discussions across social media platforms and found that the anti-DEI backlash didn't spread organically: inauthentic accounts began posting in sync the day after Target's announcement, driving a 764% surge in negative sentiment.

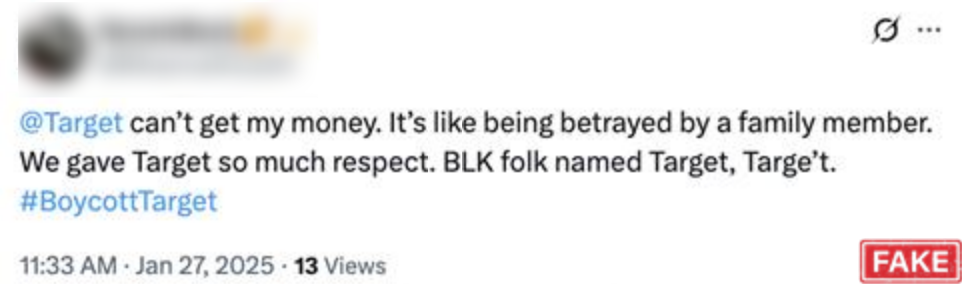

Many of these fake profiles posed as disillusioned Black consumers, claiming Target's decision was a betrayal and calling for economic retaliation. Posts urged a 40-day "Target Fast," framed as a show of collective power. The effort mirrored a structured campaign rather than spontaneous consumer outrage. Nor was it an isolated incident: it was part of a long series of boycotts over the past six months, coming on the heels of the Economic Blackout boycotts.

Criticism from Both Sides

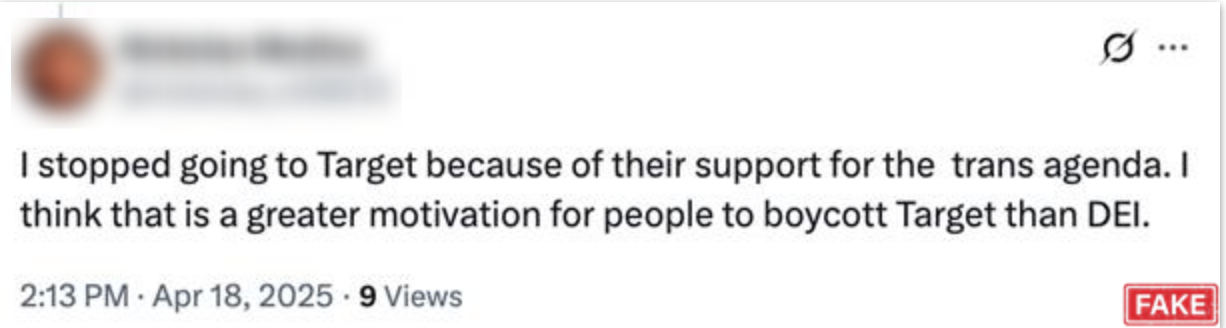

While many of the fake profiles called for a boycott, another cluster of bots presenting as "conservatives" dismissed the rollback as "too little, too late," claiming Target had already alienated them with "woke" policies. This dual amplification strategy deepened polarization, extended the campaign's reach, and undermined Target's credibility with audiences on both sides.

Even months later, fake profiles remained active. Cyabra’s follow-up analysis from late May to early June found that 39% of profiles still discussing Target were fake, with bots continuing to drive calls for boycotts, protest weeks, and economic retaliation.

When Fake Voices Dominate the Narrative

The role of fake profiles in shaping public discourse and driving the backlash around Target was undeniable. Inauthentic accounts consistently amplified outrage, flooded hashtags, and sustained the narrative for weeks after the initial news cycle. Through sheer volume, repetition, and emotional messaging, these fake profiles blurred the line between grassroots discontent and algorithmic manipulation.

This isn’t an isolated case. As online discourse becomes increasingly susceptible to manipulation, brands must be able to tell the difference between real feedback and fake amplification. Because when public perception is hijacked by fake profiles, reputations and revenues can suffer real-world consequences.

For brands navigating polarized environments, it’s no longer enough to monitor conversation volume or sentiment alone. It’s about knowing:

- Who is really driving the conversation, and whether they’re human.

- Which narratives are gaining traction organically, and which are being pushed artificially.

- The exact moment a backlash begins to build, so it can be addressed before it escalates.

To learn how Cyabra helps companies uncover the origins of online attacks, identify fake profiles, and protect brand reputation in real time, contact us.
