By Jessica Grinspoon, Cyabra Team

As we learned in the first part of this series, disinformation is information that is false and deliberately spread to inflict harm. In today’s digital age, anyone can create disinformation and accelerate it through the media, but how do we know who is behind each inauthentic post?

Anyone can produce false content, and anyone else can pick it up and redistribute it on social media. That makes it difficult to trace a given post back to its source.

Typically, disinformation is presented in clusters of false narratives created and spread to deceive for a specific purpose. This is commonly referred to as a disinformation campaign. A person, community, organization, or even a country can be the victim of a disinformation campaign. These campaigns gain traction by producing polarizing content that manipulates people’s emotions. The content is perpetuated through constant repetition until it blurs the line between truth and falsehood.

Disinformation campaigns can be spread by real people such as dissatisfied employees, competitors, lobby groups, government actors, and commissioned PR companies.

The people generating false narratives are motivated by their own agendas to shape public discourse and erode trust. Political and hostile government actors in particular spin stories and generate lies to further those agendas.

For example, Russian government officials falsely portray Russia as a perpetual victim across many platforms. Critical media content that would contradict this claim is censored and blocked from reaching Russian civilians.

Disinformation Spreaders

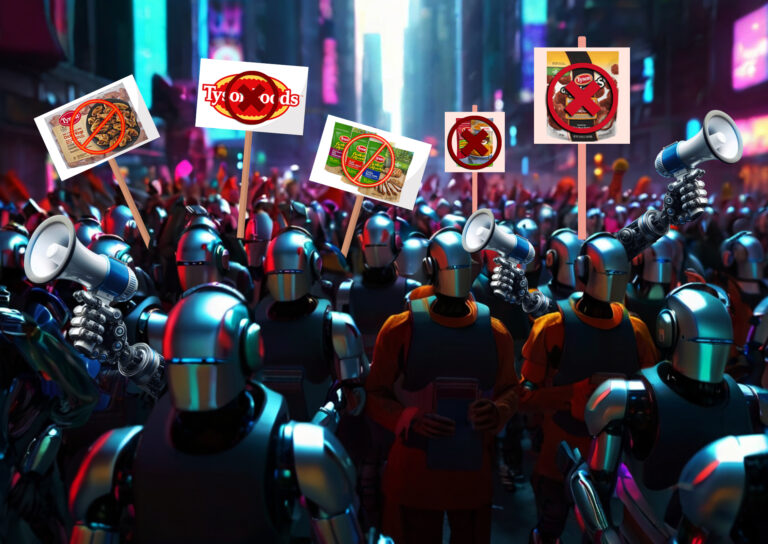

Disinformation is spread by sock puppets, trolls, and automated bots to alter the public information landscape, overwhelm audiences, and distort a person’s sense of reality. Humans can be paid to generate and redistribute content aligned with the originator’s wishes. Originators can also deploy automated accounts that mimic human users.

Sock puppets are fictitious accounts controlled by real people. This type of impersonation is comparable to catfishing. Typically, a single person controls multiple sock puppet accounts and posts the same content on all of them. Trolls, on the other hand, don’t hide behind a false persona. They use their personal profiles to provoke target accounts and spread deception.

Disinformation intensifies when false information is no longer spread manually. Bots are automated, sleepless accounts programmed to interact with people and spread information in a way that mimics human users. They are cheap and easy to deploy, making their participation in broadcasting content and spreading lies that much more of a threat.

All three types of actors are used to guide the direction of online conversations and create polarizing conspiracies. The Russian disinformation campaign targeting the 2016 United States presidential election demonstrated how government actors can exploit social media to manipulate audiences. In that campaign, bots were used to amplify pro-Trump content, create the illusion of consensus, and spread politically biased information.

Now that we have a clear understanding of the bad actors involved, the next part will dive deeper into how they manipulate the media by considering the types of content they produce and analyzing its effect on society.

The Disinformation For Beginners series includes:

- Part 1: Information Types: What is the difference?

- Part 2: Who is Manipulating the Media?

- Part 3: Are You Being Manipulated?

- Part 4: Flattening the Disinformation Curve & Cyabra’s Disinformation Term Log