If you’ve ever seen a video of Tom Cruise doing outlandish things on TikTok, or Morgan Freeman narrating a random person’s Instagram story, the odds are it’s a deepfake.

This technology allows nearly anyone to create highly realistic but fabricated audio, video, or images, making it appear as though someone said or did something they never actually did.

If this technology were used solely for creating funny memes or viral TikTok videos, it wouldn’t be a cause for concern. Unfortunately, malicious actors are exploiting deepfakes to spread disinformation, generate fake news, and undermine public trust.

How Exactly Do Deepfakes Work?

Instead of making manual changes to an image with tools such as Photoshop, deepfake creators use AI to alter or generate content automatically. This technology relies on machine learning algorithms, which, in essence, loosely mimic how our brains learn new information. Just as we learn new things through repeated exposure, machine learning strengthens or weakens the connections between artificial “neurons” based on patterns in the data it processes.

For example, when we learn to recognize a cat, we don’t memorize every possible cat image, but instead focus on key features like pointed ears, whiskers, and a particular body shape. Similarly, a deep learning network trained on thousands of cat images will learn to identify these characteristic features, which allows it to recognize and replicate cats in new images.
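To make the idea of strengthening and weakening connections a little more concrete, here is a minimal sketch of a single artificial “neuron” learning what a cat looks like. It is a toy illustration, not how deepfake models are actually built: the three hand-picked features (pointed ears, whiskers, feathers), the tiny dataset, and the function names `train_neuron` and `predict` are all assumptions made up for this example. Real deep learning networks learn their own features directly from pixels, using millions of connections.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0..1 so it reads like a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Return the neuron's confidence (0..1) that the features describe a cat."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train_neuron(samples, epochs=2000, lr=0.5):
    """Train one neuron with simple gradient descent on (features, label) pairs."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = predict(weights, bias, features) - label
            # error > 0: the neuron guessed too high, so the connections to the
            # features present in this example are weakened.
            # error < 0: it guessed too low, so those connections are strengthened.
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


# Hypothetical toy data: [pointed_ears, whiskers, feathers] -> 1 for cat, 0 otherwise.
TOY_SAMPLES = [
    ([1, 1, 0], 1),  # pointed ears and whiskers: a cat
    ([0, 1, 0], 0),  # whiskers only
    ([1, 0, 0], 0),  # pointed ears only
    ([0, 0, 1], 0),  # feathers only
    ([0, 0, 0], 0),  # none of the features
]

if __name__ == "__main__":
    weights, bias = train_neuron(TOY_SAMPLES)
    print("learned weights:", [round(w, 2) for w in weights])
    print("cat-like input:", round(predict(weights, bias, [1, 1, 0]), 3))
    print("bird-like input:", round(predict(weights, bias, [0, 0, 1]), 3))
```

After training, the connections for pointed ears and whiskers end up positive and the one for feathers ends up negative, which is the “strengthen or weaken” behavior in miniature.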

Using the same principle, after being trained on numerous images and videos of a specific person, AI learns to recognize and replicate their unique facial features, expressions, and mannerisms.

The Implications of This Technology

“The real problem of humanity is the following: we have Paleolithic emotions, medieval institutions, and god-like technology.” – Edward O. Wilson, biologist and naturalist

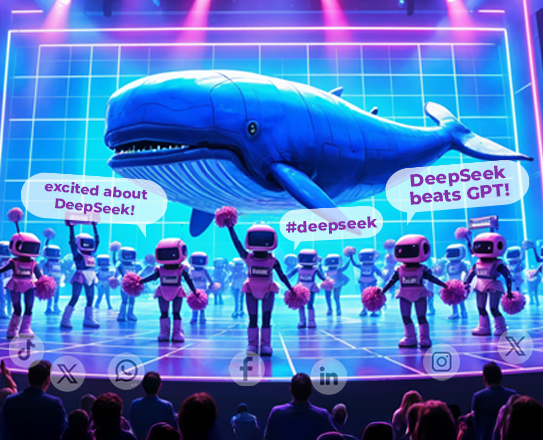

As with most technological advancements, individuals with bad intentions quickly realized that they could use this powerful tool to wreak havoc and cause disruption. The ability to create highly convincing fake videos, audio, or images opened up a Pandora’s box for those who seek to spread disinformation and fake news on social media.

While deepfakes originate online or digitally, their impact can extend far beyond the virtual realm, potentially causing massive real-world consequences. A well-timed deepfake could influence elections, manipulate financial markets, damage the reputations of individuals or brands, or even incite violence.

In 2022, an anonymous user posted a video that appeared to show Ukrainian President Volodymyr Zelenskyy telling his soldiers to surrender to Russia. In the video, a digital replica of Zelenskyy appeared to be addressing the Ukrainian military, urging them to lay down their weapons and give up the fight against Russian forces.

The deepfake Zelenskyy spoke directly to the camera, his voice and mannerisms seemingly authentic, before ending the video by proclaiming, “My advice to you is to lay down arms and return to your families. It is not worth it dying in this war. My advice to you is to live. I am going to do the same.” As you might expect, this video went viral, gaining tens of millions of views in a short amount of time, before being taken down by the social media platforms on which it had been posted.

Unfortunately, the impact of this technology is far greater than simply altering what a person says – it ultimately leads us to question the authenticity of everything we see and hear. As the public becomes more aware of deepfakes, there’s a growing skepticism towards all digital content, whether it be from reliable or questionable sources. It also allows people to use deepfakes as an “excuse” of sorts, dismissing genuine evidence of their misconduct as fake – a phenomenon which law professors Bobby Chesney and Danielle Citron call the “liar’s dividend”.

Telltale Signs of a Deepfake

As the technology improves, spotting a deepfake will become increasingly difficult, and people already struggle with it. A 2021 study conducted by researchers from the University of Amsterdam and the Max Planck Institute for Human Development found that participants correctly identified deepfake videos only 57.6% of the time, just slightly better than chance, yet they were overconfident in their ability to do so.

Even though the results of the study paint a concerning picture, they also highlight the importance of education and awareness in combating this ever-evolving threat. Moreover, being aware of the common telltale signs, even if they’re becoming more subtle, can help you approach online videos and images with a healthy dose of skepticism.

Here are five telltale signs of a deepfake:

Uncanny Eye Movement: This is the first red flag to look for when figuring out whether a video is authentic. Deepfake algorithms are often trained mostly on images in which the subject’s eyes are open, so the AI may not learn natural blinking patterns. The result is a subject who blinks too rarely or in an odd rhythm. A person’s gaze might also feel unnatural or “off” compared to real video footage.

Unnatural Facial Movement: We’re very good at sensing when something is wrong with a person’s face. If expressions look stiff or something seems off at first glance, there’s a real chance you’re looking at a deepfake.

Glitchy Body Movement: When a person in a deepfaked video turns to the side or makes sudden movements, the whole video begins to appear distorted and unnatural.

Hair Doesn’t Look Real: Rendering each strand of hair is demanding, even for powerful computers, so deepfakes often “mesh” multiple strands into a single clump. This leaves the subject’s hair looking unnaturally smooth and blurry.

Low Resolution: Similar to what happens when rendering hair, deepfake videos struggle to maintain high-definition details, resulting in low resolution and pixelation. Complex textures, like skin or fabric, may appear blurry or lack sharpness, especially around the edges of the face or where there is significant movement.
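The blurriness described in the last two signs can be put into numbers. The sketch below is a rough illustration only, not a deepfake detector: it uses a common sharpness measure (the variance of the Laplacian, swapped in here as an example and not taken from any specific detection tool) on a grayscale image stored as a plain grid of numbers. The function names `laplacian_variance` and `box_blur`, and the tiny checkerboard test image, are assumptions invented for this example. A low score only says that a region lacks fine detail, which can just as easily be caused by ordinary video compression or a cheap camera.

```python
def laplacian_variance(gray):
    """Sharpness score: variance of the 4-neighbour Laplacian over interior pixels.

    `gray` is a 2D list of brightness values. Higher scores mean more fine detail.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # How much this pixel differs from its four direct neighbours.
            responses.append(
                gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def box_blur(gray):
    """Return a copy with each interior pixel replaced by its 3x3 average."""
    height, width = len(gray), len(gray[0])
    blurred = [row[:] for row in gray]  # border pixels are left unchanged
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            total = sum(
                gray[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            )
            blurred[y][x] = total / 9
    return blurred


# Hypothetical test image: an 8x8 checkerboard stands in for a detailed texture.
SHARP = [[255 if (x + y) % 2 == 0 else 0 for x in range(8)] for y in range(8)]
BLURRY = box_blur(SHARP)

if __name__ == "__main__":
    print("sharp score: ", laplacian_variance(SHARP))
    print("blurry score:", laplacian_variance(BLURRY))
```

In practice, a score like this would be compared between regions of the same frame, for example the face versus the background, since a face that is noticeably softer than its surroundings is more telling than overall low quality.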

Cyabra: Protecting Your Brand

The large amount of dis- and misinformation on social media platforms highlights the importance of safeguarding your brand from malicious actors.

Using advanced AI and ML algorithms, Cyabra can analyze discourse related to your brand or company, track bot networks and fake accounts, and map the spread of disinformation.