TL;DR

Disinformation campaigns are increasingly targeting brands, using coordinated fake profiles and false narratives to distort public perception and damage trust.

This article is based on a talk given at the 2025 Meltwater Summit by Cyabra’s CEO, Dan Brahmy, and Edelman’s Global Head of Digital Crisis, Dave Fleet. It outlines how companies can detect these threats early and respond effectively to protect their reputation.

The Cheap Cost of Chaos

10 dollars.

That’s all it takes to create a fake crisis. To spread false narratives aimed at any company, whether it’s a Fortune 500 giant or an SMB. To do lasting damage to a brand’s reputation.

$10 is enough to purchase 50,000 fake views, 20,000 fake likes, and 500 fake comments.

Those engagements, together with a few well-placed messages, are enough to manufacture momentum, distort reality, and damage reputations at scale. And the consequences of such a cheap, effortless attack can be very hard to recover from.

The New Crisis Reality

Some brands still see disinformation, misinformation, and fake news as a problem for governments, unrelated to them or to their online reputation. At the same time, they find themselves facing crises that erupt overnight, negative hashtags that trend seemingly out of nowhere, and large communities that appear to hold a personal vendetta against them for no clear reason.

Many of those occurrences might actually be fake.

It is precisely how easy it is to create a fake campaign and tailor it to a malicious actor’s needs that has made these threats more prevalent than ever. Companies of every size and sector are exposed, including fashion, media and entertainment, retail and consumer goods, fast food, healthcare, and gaming.

In today’s chaotic information ecosystem, the lines between real and fake have blurred so much that they are often indistinguishable. For brands, that creates a new threat: conversations, trends and crises that appear organic – but are anything but.

Why Dis/Misinformation – and Why Now?

Few would dispute that digital spaces have become fertile ground for fake news, falsehoods, and manipulated narratives.

“Why now” is the harder question. No single factor made these threats so widespread and effective. Instead, several measurable trends have combined to make the ground fertile for disinformation:

- Weaponization of geopolitical volatility – Bad actors monetize division and exploit politically charged topics to increase polarization, amplify tension, and sow distrust. As a result, political issues send huge ripples through the business world.

- Swinging pendulum of society – Positions on key issues (such as DEI, climate change, and stakeholder capitalism) are increasingly tribal and binary. Bad actors take advantage of these cultural shifts.

- Algorithm-fuelled echo chambers – Shifts in media consumption habits, tailored versions of truth, and social media platforms’ move away from moderation.

- Declining trust in institutions and the rise of internet research – Edelman’s research showed that 38% of Gen Z disregard medical advice and prefer the advice of peers or social media. The sheer volume of online information reinforces the illusion that consumers can find anything online. Many therefore trust their own desk research over trained, credentialed expertise.

- Growing accessibility of generative AI and content creation tools – Bad actors regularly use GenAI engines and other AI tools to make fake narratives, fake profiles, and coordinated campaigns appear completely authentic. Edelman’s research showed that 63% of the population has trouble determining the credibility of information.

Combined, these trends have created what’s often referred to as a “chaos economy” – where public perception can shift rapidly, often before brands even realize they’re under attack.

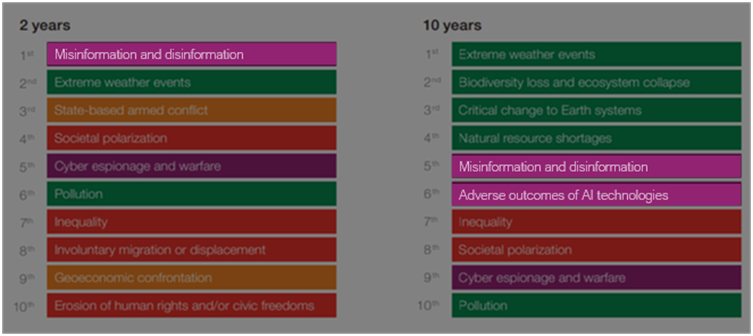

The threat of dis/misinformation: Global risks ranked by severity over time.

Source: World Economic Forum

What Disinformation Looks Like in Practice

In the world of threat intelligence and information warfare, three key components form the structure of every disinformation campaign or social engineering tactic.

This framework is also referred to as the ABCs of Disinformation:

- A – Actors: Fake profiles and coordinated bot networks, created to drive online chaos.

- B – Behavior: The methods that bad actors implement in their campaigns: high-volume posting, hashtag hijacking, coordinated posting across accounts and platforms, integrating into authentic communities, interacting with high-profile influencers, and more.

- C – Content: The text, images, or videos these campaigns spread. This includes manipulative narratives that provoke, polarize, and create mistrust; AI-generated images of fake events (e.g. Disney flood, Pentagon explosion) or altered depictions of real people (e.g. Biden in the hospital, Harris in prison); and deepfakes (fake videos used to impersonate real people).

Combined, these elements create a new playing field in which distinguishing real from fake becomes nearly impossible without AI-led detection tools.

Why Brands Are Being Targeted

Disinformation isn’t always personal. Sometimes, it’s opportunistic. Brands can become symbols or pawns in larger ideological or societal battles. Other times, they’re targeted for economic impact – triggering boycotts, shareholder panic, or executive impersonation campaigns.

Here are some of the most effective types of attacks on brands, as witnessed by Cyabra:

- Brand reputation attacks

- Impersonation

- Societal issues

- Deepfakes

- Mis/disinformation

- Stock market manipulation

- Executive defamation

These are not isolated incidents. They’re part of a growing pattern – and they’re becoming harder to detect with the naked eye.

Four Principles for an Effective Response

To stay ahead, brands need more than a crisis comms playbook. They need a disinformation response strategy. Here are four essential principles based on Cyabra’s and Edelman’s research:

- Know your vulnerabilities: Map the threats you’re most exposed to and track emerging risks.

- Build resilience in advance: Communicating early and proactively with your teams, your stakeholders, and your customers can prevent false narratives from taking hold.

- Prepare for escalation: Set clear triggers for when to escalate internally and externally.

- Work cross-functionally: Disinformation responses require coordination across comms, security, legal, and leadership.

What You Can Do Today

When perception is shaped by fake engagement, reputations are at risk and brand trust becomes fragile. Protecting that trust requires new tools, faster detection, and a deeper understanding of how narratives form, spread, and escalate. It also requires knowing who drives them and how they operate.

Here are three simple action items you can take today:

- Understand your narrative risk landscape: Audit your brand’s current exposure to manipulated narratives.

- Know – and practice – how you will respond to a mis/disinformation issue: Train teams to recognize the signals of coordinated digital behavior.

- Begin building resilience now, not after the issue hits: Establish an early warning system for emerging disinformation threats, and use monitoring tools that measure authenticity, not just volume or sentiment.

__________________

“Manipulated Narratives: Navigating the Reputational Maze of Misinformation” was given as a talk during a joint session at the 2025 Meltwater Summit in New York by Dan Brahmy, CEO of Cyabra, and Dave Fleet, Global Head of Digital Crisis at Edelman.
