TL;DR

- The “We Ain’t Buying It” movement targeted Amazon, Target, Home Depot, and other retailers, urging consumers not to shop at these companies.

- The boycott, which cited the companies’ rollback of DEI initiatives, was supported by major influencers who amplified the message.

- The online campaign was strategically timed for November 2025, targeting the Black Friday to Cyber Monday period to maximize pressure on brands.

- Negativity escalated quickly online: 95% of the profiles in the conversation spread negative messaging.

- A striking 37% of profiles discussing the holiday boycott were fake, and they directly attacked corporate giants to harm brand reputation.

The Fake Campaign That Hijacked a Real Consumer Movement

In November 2025, a grassroots consumer movement urging Americans to boycott major retailers emerged just before the critical holiday shopping season. The “We Ain’t Buying It” campaign targeted companies such as Amazon, Target, Home Depot, Walmart, Ford, McDonald’s, Jack Daniel’s, Toyota, and many others, accusing them of abandoning their diversity commitments by rolling back their DEI policies at the beginning of 2025.

Cyabra’s analysis of holiday boycott conversations between November 1 and 16 found that while authentic influencers triggered the boycott, its online presence was, and still is, significantly amplified by inauthentic accounts, creating a perception of widespread consumer outrage.

The fake campaign’s timing was strategic: it latched onto the boycott, which targeted the year’s most profitable shopping period, Black Friday through Cyber Monday, to maximize pressure on retailers during their most critical sales days.

The Numbers Behind the DEI Boycott Manipulation

- The grassroots movement started across platforms, as authentic consumers voiced anger, disappointment, and a sense of betrayal, often calling for economic action even before a formal campaign existed

- The “We Ain’t Buying It” campaign officially launched on November 10, 2025, with the earliest posts traced to influencer LaTosha Brown, co-founder of Black Voters Matter

- 95% of the profiles in the holiday shopping discourse spread negative messaging, promoting divisive and antagonistic content. The phrase “We Ain’t Buying It” was repeated in many posts supporting the movement

- 37% of profiles discussing the boycott were identified as fake, far exceeding the typical 7–10% baseline in most brand conversations and pointing to a clear intent to damage brand reputation

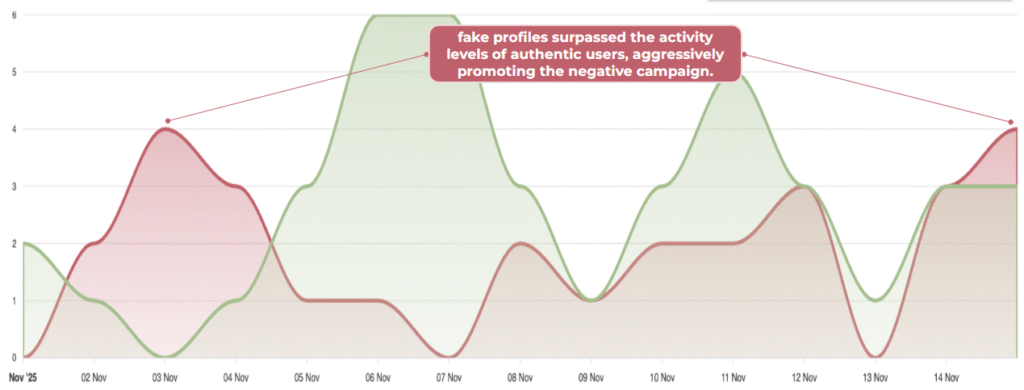

- Fake profiles were most prevalent on X, representing 22% of the accounts in the discourse. On several days, fake accounts were actually more active than authentic users in promoting the boycott

This extraordinary level of inauthentic activity distorts both the movement’s actual size and the true state of consumer sentiment, creating a major challenge for corporate communications teams trying to gauge the real scale of the resistance and determine appropriate responses.

Below: LaTosha Brown’s post, which played a major role in spreading the boycott calls. Brown continued to post content supporting the boycott throughout November and December.

Manufacturing a Movement: Coordinated Tactics Revealed

LaTosha Brown’s post quickly became a dominant narrative across various platforms. Within hours, fake accounts began amplifying the message using nearly identical language, creating a centralized campaign with:

- Coordinated traffic to the campaign’s website (weaintbuyingit.com)

- Synchronized cross-platform messaging: identical posts by multiple accounts, all posting at the same time across different platforms

- Strategic blending of fake accounts with genuine users to create false impressions of widespread support

- Echoing influencers’ messages: not just LaTosha Brown’s, but also Al Sharpton’s announcement, made as early as January 21, calling for a boycott of companies that eliminate DEI programs
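The synchronized, near-identical posting described above can be illustrated with a simple heuristic. The Python sketch below is not Cyabra’s method; it assumes posts are available as records with an account, platform, text, and timestamp, and the `Post` type, `normalize`, `find_synchronized_clusters`, the 10-minute window, and the three-account minimum are all hypothetical. It groups posts whose text is identical after light normalization, then flags bursts in which several distinct accounts publish that text close together in time, on any platform.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Post:
    # Hypothetical record shape; real platform exports will differ.
    account: str
    platform: str
    text: str
    posted_at: datetime


def normalize(text: str) -> str:
    """Lowercase, strip URLs and punctuation, and collapse whitespace."""
    text = re.sub(r"https?://\S+", "", text.lower())
    text = re.sub(r"[^\w\s]", "", text)
    return " ".join(text.split())


def find_synchronized_clusters(posts, window=timedelta(minutes=10), min_accounts=3):
    """Flag bursts of identical (normalized) text posted by many distinct accounts.

    A burst is a run of same-text posts in which each post follows the
    previous one by no more than `window`.
    """
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    bursts = []
    for text, group in by_text.items():
        group.sort(key=lambda p: p.posted_at)
        current = [group[0]]
        for prev, cur in zip(group, group[1:]):
            if cur.posted_at - prev.posted_at <= window:
                current.append(cur)
            else:
                bursts.append((text, current))
                current = [cur]
        bursts.append((text, current))

    flagged = []
    for text, burst in bursts:
        accounts = sorted({p.account for p in burst})
        if len(accounts) >= min_accounts:
            flagged.append({
                "text": text,
                "accounts": accounts,
                "platforms": sorted({p.platform for p in burst}),
            })
    return flagged


# Illustrative data only; these accounts and posts are made up.
t0 = datetime(2025, 11, 10, 12, 0)
posts = [
    Post("acct_a", "X", "We Ain't Buying It! https://example.com", t0),
    Post("acct_b", "X", "we aint buying it", t0 + timedelta(minutes=2)),
    Post("acct_c", "Instagram", "We ain't buying it.", t0 + timedelta(minutes=4)),
    Post("acct_d", "X", "Shopping elsewhere this year", t0 + timedelta(hours=3)),
]
clusters = find_synchronized_clusters(posts)
print(clusters)
```

Exact matching after normalization is deliberately crude: it misses paraphrased copies, so a real system would likely need fuzzier text similarity to catch them.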

While the authentic boycott was precisely timed to coincide with the Black Friday to Cyber Monday shopping period (maximizing both symbolic and financial impact when brands are most vulnerable and visible), the coordinated fake campaign inflated this authentic criticism into what appeared to be a much bigger movement. November 3 and 14 were the fake campaign’s strongest days: its activity was significantly higher, and generated more reach, than that of the real profiles participating in the movement.

Why Communications Teams Need Authenticity Intelligence

For communications professionals, the “We Ain’t Buying It” boycott demonstrates how modern brand threats operate.

Traditional media monitoring and social listening tools would completely miss that 37% of accounts driving the boycott narrative were inauthentic. Without authenticity analysis, communications teams risk:

- Overreacting to manufactured controversies

- Misallocating crisis response resources

- Making concessions to pressure that doesn’t represent genuine consumers’ concerns

Authenticity intelligence reveals the actual scale of authentic concern versus artificial amplification, providing the crucial dimension for effective response strategies.

Protecting Your Brand Against Manufactured Boycotts

For communications professionals navigating this landscape, Cyabra’s analysis suggests several strategic approaches:

- Implement social listening tools that identify coordinated inauthentic behavior

– Look beyond raw engagement numbers to analyze interaction authenticity

– Monitor for synchronized posting patterns and identical messaging

- Distinguish between artificial amplification and genuine sentiment

– Assess the proportion of authentic vs. inauthentic accounts in the conversation

– Evaluate whether negative sentiment is broadly distributed or concentrated

- Develop proportional response strategies

– Consider the true scale of authentic criticism when determining response level

– Focus on addressing substantive concerns raised by verified stakeholders and consumers
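One way to check whether negative sentiment is broadly distributed or concentrated is to measure how much of it comes from the most active accounts. The sketch below assumes each post has already been labeled with its author and a negative/non-negative flag; the input format, the `top_account_share` name, and the `top_n` cutoff are hypothetical. A high share suggests a small group of accounts is driving the negativity, which is a signal worth investigating rather than proof of inauthenticity.

```python
from collections import Counter


def top_account_share(posts, top_n=10):
    """Fraction of negative posts written by the `top_n` most prolific negative posters.

    `posts` is an iterable of (account, is_negative) pairs.
    """
    negative_counts = Counter(account for account, is_negative in posts if is_negative)
    total = sum(negative_counts.values())
    if total == 0:
        return 0.0
    top = sum(count for _, count in negative_counts.most_common(top_n))
    return top / total


# Made-up example: one account writes 6 of the 10 negative posts.
posts = [("a", True)] * 6 + [("b", True)] * 2 + [("c", True), ("d", True), ("e", False), ("e", False)]
print(top_account_share(posts, top_n=1))  # 0.6
print(top_account_share(posts, top_n=2))  # 0.8
```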

Communications teams should watch for inauthentic profile indicators: limited personal information, repetitive posts, unusually high activity rates, and coordinated engagement patterns.

By taking a measured, data-driven approach to assessing boycott campaigns, brands can avoid both underreacting to legitimate consumer concerns and overreacting to artificially amplified narratives. To learn more about how retailers can identify and respond to these threats, contact Cyabra.


Lessons From the DEI Boycott: Test Your Crisis Response

Most social listening tools track what’s being said, but not who’s saying it. Without authenticity analysis, you can’t distinguish between a verified customer with a legitimate complaint and a fake account created to damage your brand. Look beyond engagement metrics to examine profile authenticity: account age, posting patterns, profile completeness, and interaction history. In the “We Ain’t Buying It” case, companies relying solely on volume metrics would have seen overwhelming negativity without realizing that 37% of voices weren’t real consumers at all.

1. Evaluate timing: Be especially vigilant about boycotts launched during critical business periods

2. Monitor for coordination patterns: Watch for synchronized messaging and suspicious timing of boycott calls

3. Deploy authenticity analysis before responding: Determine the true scale of genuine consumer concern vs. artificial amplification

Using these methods enables brands to respond appropriately without inadvertently amplifying manufactured controversies.

Start by separating the noise from genuine concern. If 37% of accounts are fake (as in the “We Ain’t Buying It” boycott), your actual consumer sentiment challenge is significantly smaller than it appears. Before issuing statements, making policy changes, or allocating crisis resources, measure the authenticity ratio in the conversation. A boycott that looks like 100,000 angry voices might actually represent 63,000 real people, and potentially far fewer who are actual customers. Proportional response means addressing legitimate concerns from verified stakeholders while avoiding unnecessary concessions to manufactured pressure that doesn’t reflect your true customer base.
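The adjustment described above is simple arithmetic: subtract the estimated share of fake accounts from the total. The sketch below reproduces the 100,000-account example using the 37% fake share and the 7–10% baseline cited in the report; the function names are hypothetical, and it treats every account as one voice, so it says nothing about how many of the remaining accounts are actual customers.

```python
def authentic_volume(total_accounts: int, fake_share: float) -> int:
    """Estimate how many accounts in a conversation are likely authentic."""
    if not 0.0 <= fake_share <= 1.0:
        raise ValueError("fake_share must be between 0 and 1")
    return total_accounts - round(total_accounts * fake_share)


def exceeds_baseline(fake_share: float, baseline_max: float = 0.10) -> bool:
    # Uses the upper end of the typical 7-10% fake-account baseline.
    return fake_share > baseline_max


print(authentic_volume(100_000, 0.37))  # 63000
print(exceeds_baseline(0.37))  # True
```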

Look for red flags: newly created accounts, minimal profile information, unusually high posting frequency, and repetitive messaging. Real customers typically have varied content histories, personal information, and authentic engagement patterns. Fake accounts often post identical or near-identical content, operate in coordinated bursts, and lack the organic interaction patterns of genuine users. In sophisticated campaigns, fake accounts may blend with real voices, making manual detection nearly impossible. This is where AI-powered authenticity tools become essential.
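The red flags listed above can be turned into a simple rule-based checklist. The sketch below is a naive heuristic, not an AI-powered authenticity model and not Cyabra’s method; the `Profile` fields, the thresholds (30 days, 50 posts per day, 50% duplicate posts), and the two-flag review cutoff are hypothetical values chosen only for illustration. It flags accounts for human review rather than labeling them fake, since any single indicator is weak evidence on its own.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    # Hypothetical fields; real platforms expose different metadata.
    handle: str
    account_age_days: int
    has_bio: bool
    has_photo: bool
    posts_per_day: float
    duplicate_post_ratio: float  # share of the account's posts that repeat another post verbatim


# Illustrative thresholds only, not validated values.
MIN_ACCOUNT_AGE_DAYS = 30
MAX_POSTS_PER_DAY = 50
MAX_DUPLICATE_RATIO = 0.5


def red_flags(p: Profile) -> list:
    """Return the red-flag indicators this profile triggers."""
    flags = []
    if p.account_age_days < MIN_ACCOUNT_AGE_DAYS:
        flags.append("new account")
    if not (p.has_bio or p.has_photo):
        flags.append("minimal profile")
    if p.posts_per_day > MAX_POSTS_PER_DAY:
        flags.append("high posting frequency")
    if p.duplicate_post_ratio > MAX_DUPLICATE_RATIO:
        flags.append("repetitive messaging")
    return flags


def needs_review(p: Profile, min_flags: int = 2) -> bool:
    """Queue a profile for manual review when it triggers several indicators."""
    return len(red_flags(p)) >= min_flags


suspect = Profile("acct_x", account_age_days=5, has_bio=False, has_photo=False,
                  posts_per_day=120, duplicate_post_ratio=0.8)
regular = Profile("acct_y", account_age_days=2000, has_bio=True, has_photo=True,
                  posts_per_day=3, duplicate_post_ratio=0.05)
print(red_flags(suspect))
print(needs_review(regular))  # False
```

Because sophisticated campaigns blend fake accounts with real ones, fixed thresholds like these are easy to evade; they illustrate the indicators rather than replace dedicated detection tools.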