It’s 2 AM, and your corporate communications team wakes up to hundreds of negative mentions. They have no idea how this crisis started or where it’s coming from. Unfortunately, this nightmare scenario has become increasingly common in recent years. While social media teams rely on social listening tools to track brand mentions, sentiment, and engagement, these traditional tools are increasingly failing to provide the insights needed to manage reputational risks effectively.

Here’s where your toolkit for monitoring, analyzing, and understanding social media is failing you.

TL;DR?

- Fake profiles change the game: Fake profiles are now part of every social media discourse. In the past, coordinated fake campaigns targeted major global issues such as elections or wars; today, they target brands as well.

- Their methods are perfected to harm brands: These inauthentic accounts latch onto false and negative narratives and amplify them, spreading disinformation to cause confusion, rage, and mistrust.

- Traditional listening tools can’t distinguish between a coordinated attack and genuine consumer discontent: Your team may be unaware that the negativity surrounding your brand is often driven by fake profiles. Without full context, traditional social listening offers an incomplete picture – leading to ineffective responses that can actually escalate the crisis.

The Blind Spots in Traditional Social Listening

Analyzing social media discourse the traditional way involves tracking mentions and keywords and analyzing communities, narratives, demographics, and sentiment. However, these tools never tell the full story: they cannot show whether a crisis is authentic or artificially amplified by bots and fake profiles, and they cannot differentiate real consumer concerns from orchestrated disinformation campaigns.

While fake accounts usually make up 5% to 8% of any online conversation, during an issue or a crisis this share can jump to 15%, 20%, or even 40% of the discourse.

Fake profiles are always created with an agenda: they could be foreign state actors trying to harm the economy, a radical movement using them to spread its message, or even a competitor or a disgruntled client. While the agenda can vary, the result is the same: fake profiles massively push misleading and toxic narratives, harmful hashtags, controversy, and divisive influencers’ content. In doing so, they artificially inflate negative sentiment and amplify confusion, rage, and mistrust.

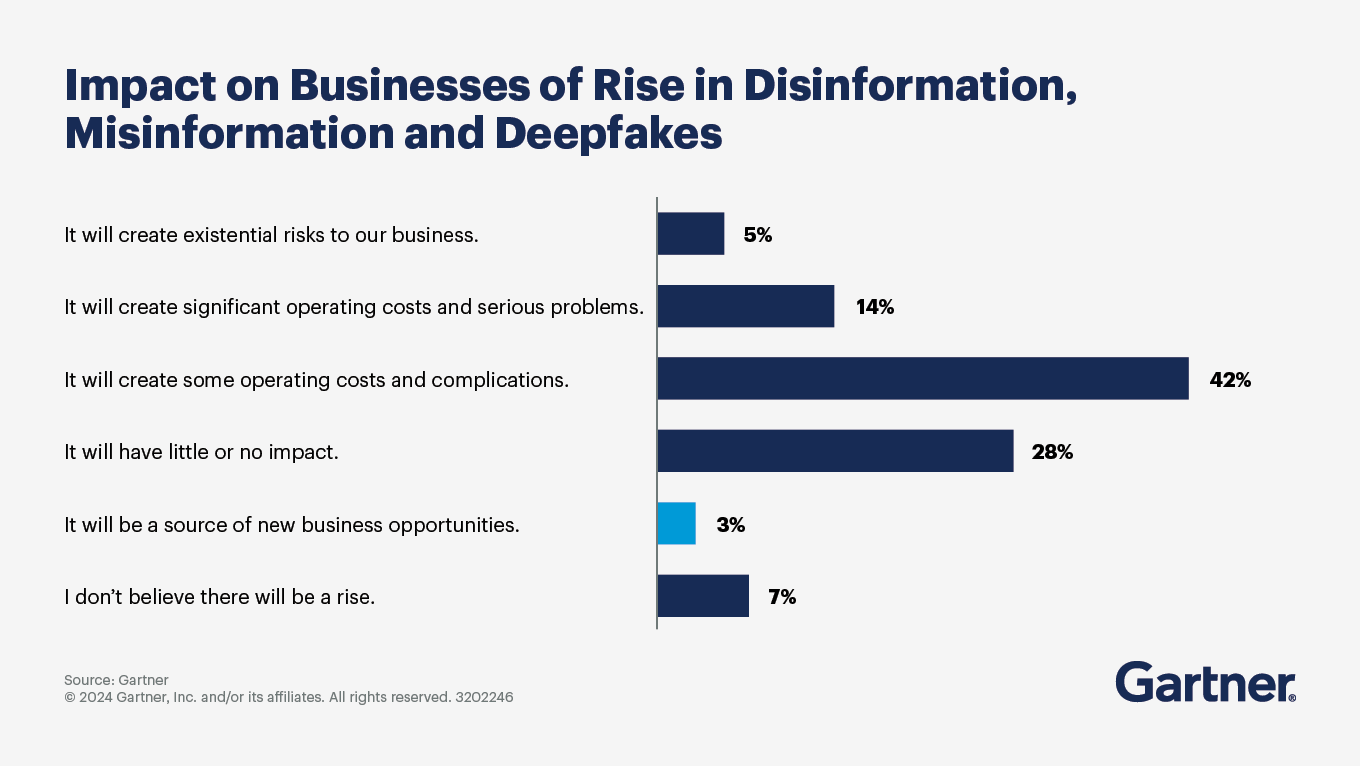

In recent years, Cyabra has analyzed hundreds of crises and issues and witnessed a gradually increasing presence of fake profiles in online conversations, which in turn has had a growing impact on public discourse. These tactics, also known as Brand Disinformation, have resulted in huge financial losses and irreparable damage to the reputation of major companies. Many of those fake campaigns and coordinated attacks have weaponized AI and GenAI tools, making both their content and their profiles appear more authentic and making their manipulations more effective.

This is the reality for brands today: You may be tracking social media conversations, but are you tracking the real ones?

The Problem: Why Social Listening Alone Doesn’t Cut It

1. Negative Engagement Isn’t Always Real

Traditional tools treat all interactions equally, assuming that every comment, share, and like comes from an authentic user. But in reality, bots and coordinated disinformation networks can hijack conversations, and what starts as a minor issue could quickly turn into a full-blown catastrophe.

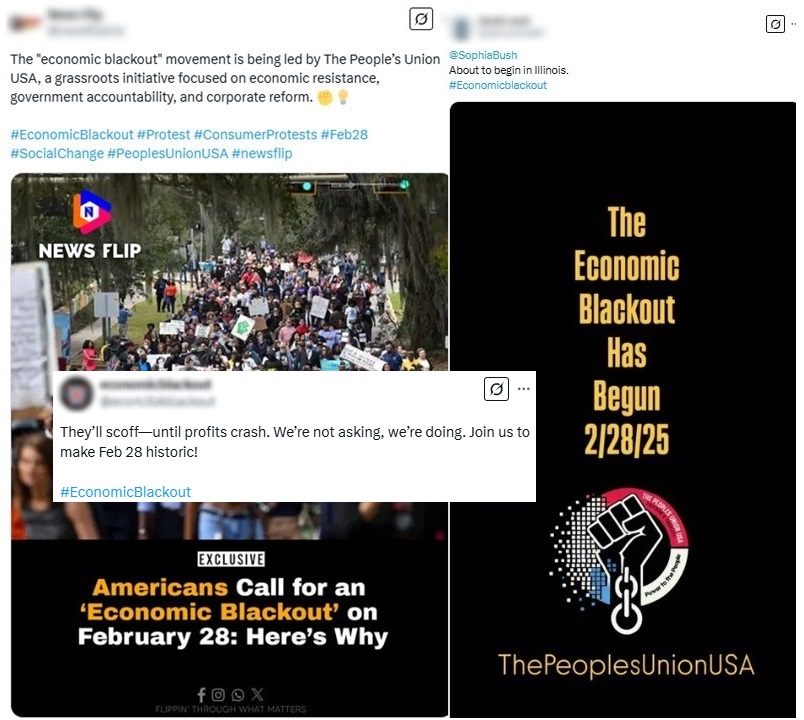

Example: The Economic Blackout. A recent study by Cyabra analyzed social media discourse surrounding an initiative created by an activist group that declared Friday, February 28 a “No Spending” day, urging consumers to avoid shopping – especially from major corporations like Amazon, Walmart, Target, and Best Buy. While traditional social listening tools classified this crisis as an authentic consumer protest, Cyabra uncovered that this discourse was actually massively hijacked by fake profiles, which amplified #EconomicBlackout and spread content that reached 5,328,000+ views!

2. You React Without Having the Full Picture

Most social listening tools operate after something has gone viral. By the time a narrative gains traction, PR teams are scrambling for damage control. Without real-time narrative tracking, you’re always playing defense – responding after misinformation has already shaped public perception. What’s worse, your response might ironically be amplifying the crisis: your customer support team could be unknowingly interacting with fake profiles, increasing their engagement and exposure, instead of reporting them.

3. You’re Drowning in the Noise

Traditional sentiment analysis is often too simplistic: it cannot tell real from fake, so it misses the full picture. Once the noise of fake profiles is cleared out, you can make smarter, better-informed decisions. While you monitor and report the coordinated attack rather than engage with it, the cleaned-up picture helps you identify positive narratives, communities, and influencers, understand what to focus on, and amplify positive content.
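To make the idea of clearing out the noise concrete, here is a minimal Python sketch. It assumes each mention already carries an `is_fake` flag from some upstream authenticity check; that flag, the `Mention` class, and the sample data are hypothetical and only illustrate the arithmetic, not any particular vendor's method.

```python
from dataclasses import dataclass


@dataclass
class Mention:
    author: str
    sentiment: str  # "negative", "neutral", or "positive"
    is_fake: bool   # hypothetical flag supplied by an upstream authenticity check


def negative_share(mentions):
    """Return the fraction of mentions that are negative (0.0 for an empty list)."""
    if not mentions:
        return 0.0
    return sum(m.sentiment == "negative" for m in mentions) / len(mentions)


# Made-up sample data: two of the three negative mentions come from fake profiles.
mentions = [
    Mention("a", "negative", True),
    Mention("b", "negative", True),
    Mention("c", "negative", False),
    Mention("d", "positive", False),
    Mention("e", "neutral", False),
]

# Keep only mentions from profiles that were not flagged as fake.
authentic = [m for m in mentions if not m.is_fake]

raw_share = negative_share(mentions)
authentic_share = negative_share(authentic)

print(f"Negative share, all mentions:   {raw_share:.0%}")
print(f"Negative share, authentic only: {authentic_share:.0%}")
```

In this toy data set, the negative share looks much higher before the flagged profiles are removed, which is the gap a volume-only view would miss.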

The Solution: Moving Beyond Listening

Brands need to stop relying solely on traditional social listening tools and start leveraging smarter solutions that utilize AI for good:

- Authenticity Analysis: Instead of tracking volume alone, Cyabra’s AI identifies fake profiles, bot activity, and coordinated campaigns, so you know which discourse to address and which to ignore.

- Real-Time Alerts: AI-driven alerts detect emerging threats before they go viral, giving your team a crucial window to act. Sleep easy and let those tools work for you, and focus your efforts where they truly matter.

- Contextual Prioritization: Clearing out the noise helps determine which narratives have real impact and which are artificially inflated, and can even support your growth by allowing you to identify authentic, positive influencers, communities, and narratives.
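As a rough illustration of how an alert on inauthentic activity could work, the Python sketch below flags a conversation window when the share of fake profiles rises well above a baseline. The 8% baseline is the upper end of the typical range cited earlier in this article; the `factor` and `min_profiles` parameters, the function names, and the sample windows are assumptions made for this example and do not describe Cyabra's actual system.

```python
def fake_share(window):
    """Return the fraction of profiles in a window flagged as fake.

    `window` is a list of booleans, one per profile (True means flagged as fake).
    """
    if not window:
        return 0.0
    return sum(window) / len(window)


def should_alert(window, baseline=0.08, factor=2.0, min_profiles=20):
    """Alert when the fake share reaches `factor` times the baseline.

    Windows with fewer than `min_profiles` profiles are skipped, because a
    handful of accounts is too small a sample to judge (illustrative threshold).
    """
    if len(window) < min_profiles:
        return False
    return fake_share(window) >= baseline * factor


# Hypothetical windows of 50 profiles each.
quiet_window = [True] * 3 + [False] * 47    # 6% flagged as fake
crisis_window = [True] * 12 + [False] * 38  # 24% flagged as fake

print(should_alert(quiet_window))
print(should_alert(crisis_window))
```

A fixed multiple of the baseline is only the simplest possible rule; a real deployment would likely tune the threshold per platform and topic.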

Conclusion: The Future of Brand Protection

The social media landscape has changed, and traditional social listening tools are no longer enough. The next era of corporate communications requires AI-driven authenticity detection, real-time threat alerts, and contextual intelligence that presents the full picture of social media discourse. The threat of brand disinformation will continue to grow. Avoiding controversial topics won’t save brands from the next crisis. If they want to stay ahead and defend their reputation, they must be proactive and use solutions that don’t just listen but monitor, detect, analyze, alert, and enable informed decision-making and reaction.