Following Amazon’s announcement that it would roll back its DEI initiatives, Cyabra conducted a six-month study of the viral backlash and boycott surrounding Amazon and found that fake accounts were central to the narrative: they posed as grassroots activists, amplified boycott hashtags, and flooded feeds with service complaints. These inauthentic profiles helped turn a policy decision into a PR crisis. Here’s what Cyabra uncovered:

TL;DR?

- 35% of accounts discussing Amazon from January to June 2025 were fake. They fueled the boycott with the hashtags #BoycottAmazon and #EconomicBlackout, spreading more than 1,500 posts and comments that reached 1.7 million potential views.

- Fake activity spiked by 905% on February 28 and by 26,500% on April 28, timed with major boycott pushes from the Economic Blackout movement.

- Fake profiles made up 55% of accounts still discussing Amazon in June, long after the official campaign ended. Some are still active to this day, posing as frustrated Amazon customers.

Grassroots in Appearance, Synthetic in Origin

In late December 2024, Amazon announced plans to roll back its DEI (Diversity, Equity, and Inclusion) initiatives. By January, public backlash was gaining traction. By February, it had gone viral. But Cyabra’s investigation reveals that what looked like a widespread consumer revolt was, in large part, manufactured:

Over a third of the accounts discussing Amazon from January to June 2025 were fake, producing 1,528 posts and comments fueling the boycott. These inauthentic accounts were active drivers of the conversation, using hashtags like #BoycottAmazon, #AmazonFail, and #EconomicBlackout to align themselves with consumer frustration and anti-corporate narratives.

The first major spike in bot activity occurred on February 28, when the People’s Union’s Economic Blackout campaign called for a nationwide 24-hour boycott of non-essential purchases. Fake profiles mimicked activist voices calling for collective economic power. In the lead-up to a second boycott day two months later, the pattern repeated, with another massive surge in fake activity on April 28. During those two surges, fake profiles generated 1,984 engagements and 1,702,000 potential views!

Many of the fake profiles were meticulously designed to look like everyday consumers and community activists. They used slogans like “Power to the People,” posted protest graphics, and pushed time-bound calls to action. The repetition of language, coordinated timing, and rapid amplification across accounts all point to a concerted effort to shape perception and mobilize action.

While not every user supporting the boycott was fake, fake accounts significantly altered the scale, tone, longevity, and virality of the conversation.

Faking Frustration

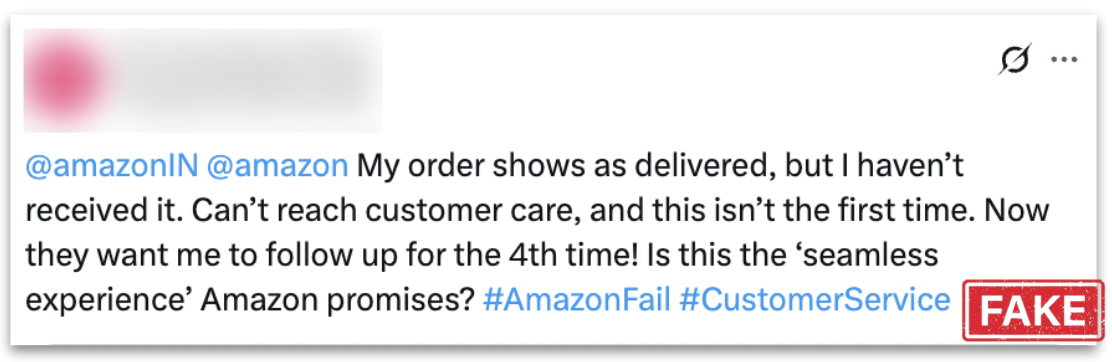

While many inauthentic accounts leaned into the narrative of Amazon’s DEI rollback as the reason to boycott, an additional coordinated subset took a different approach: complaints about bad service.

Using hashtags like #AmazonFail, these bots flooded feeds with gripes about shipping delays, missing refunds, and product issues – many of them duplicated across multiple posts with similar tone and language. By repeating these themes en masse, bots created the illusion of overwhelming consumer dissatisfaction.

And it worked. Cyabra’s analysis shows that on multiple days in June 2025, fake accounts were responsible for more posts about Amazon than authentic users. While the official boycotts had ended, the fake campaign continued, shifting the conversation from DEI to service quality, and keeping Amazon in the crosshairs.

When Manufactured Outrage Drives Market Impact

Amazon’s backlash was not entirely fake, but it was significantly fueled by inauthentic activity. What began as frustration over a corporate policy was escalated by bots, redirected toward broader complaints, and sustained well beyond the initial flashpoint.

This case illustrates how quickly a narrative can be hijacked and how difficult it is for companies to respond when fake voices dominate the conversation.

For brands navigating reputational risk in today’s digital landscape, distinguishing between real customers and synthetic outrage is critical.

To respond effectively, companies must have clear visibility into what is being said, who is saying it, and how it is spreading. That means:

- Monitoring sentiment and discourse patterns in real time to catch issues as they develop

- Distinguishing between real customers and fake accounts, and understanding who is shaping perception

- Knowing when to act, and what kind of message will resonate with authentic audiences

Cyabra’s new AI alerts offer early warnings about suspicious activity and coordinated amplification, so brands can get ahead of a narrative before it spirals. Cyabra continuously scans billions of online interactions to identify risks across social platforms and news media.

Contact Cyabra to learn more about our new AI alerts and to see how Cyabra helps brands uncover inauthentic campaigns, detect bot networks, and respond with clarity – saving your team time and effort by bringing the insights directly to you.

Download the full report by Cyabra